Companies are embracing AI applications and leveraging a variety of data types (structured, semi-structured, and unstructured). In order for any AI system to add value both immediately and in an ever-changing environment, it must have high-quality data from which to build its models. In fact, selecting the right data for your ML application is probably more important than selecting the right model.

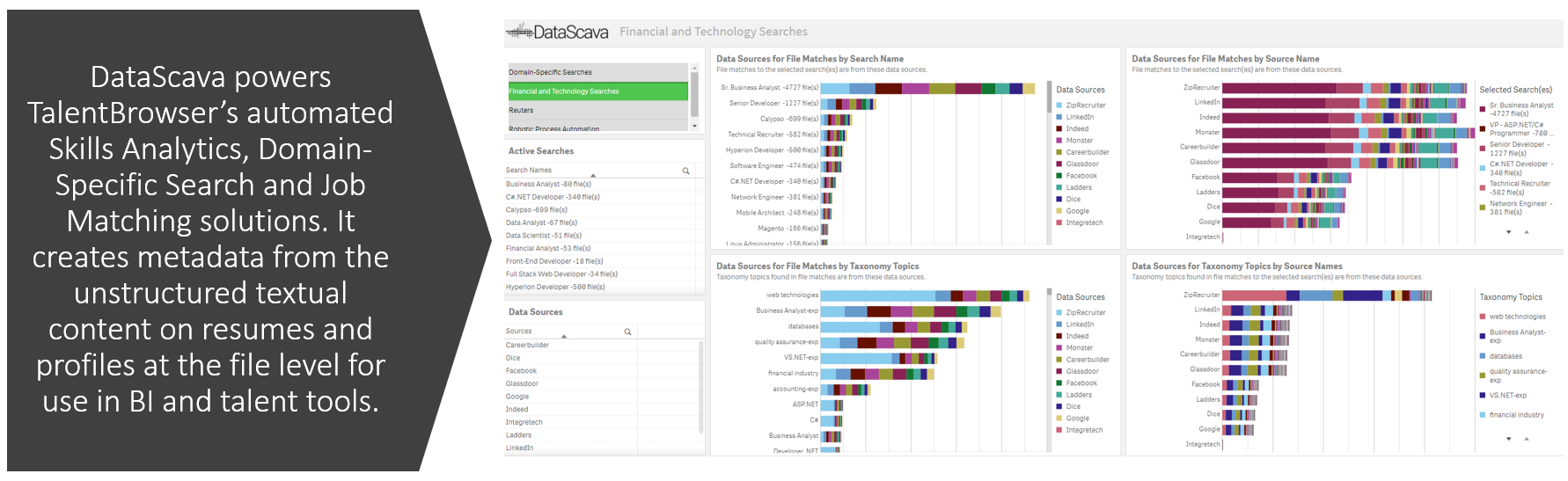

DataScava can help you partition, select, and accurately label the unstructured data you use to train your systems using your own business and domain-specific language that you define and control on an ongoing basis. This is the power of the DataScava approach.

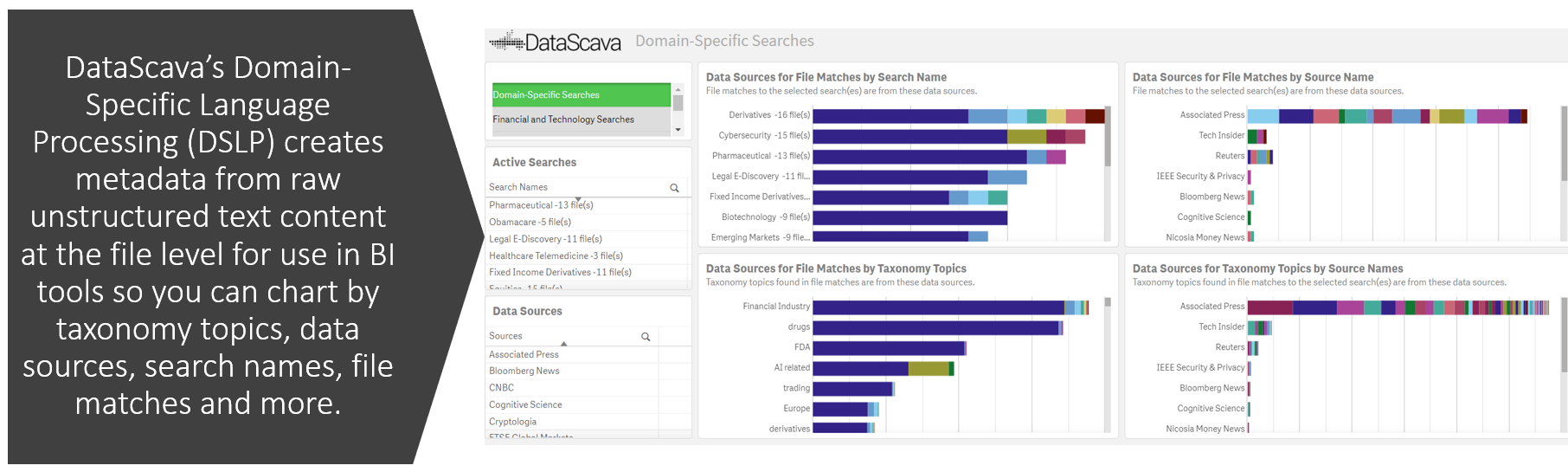

We employ three methodologies in mining unstructured text data for use in AI, LLMs, and Machine Learning, which generate value-added weighted topic scores and other metadata about raw text for use in other systems and charting. They work as a highly precise alternative or adjunct to Natural Language Processing (NLP) and Natural Language Understanding (NLU).


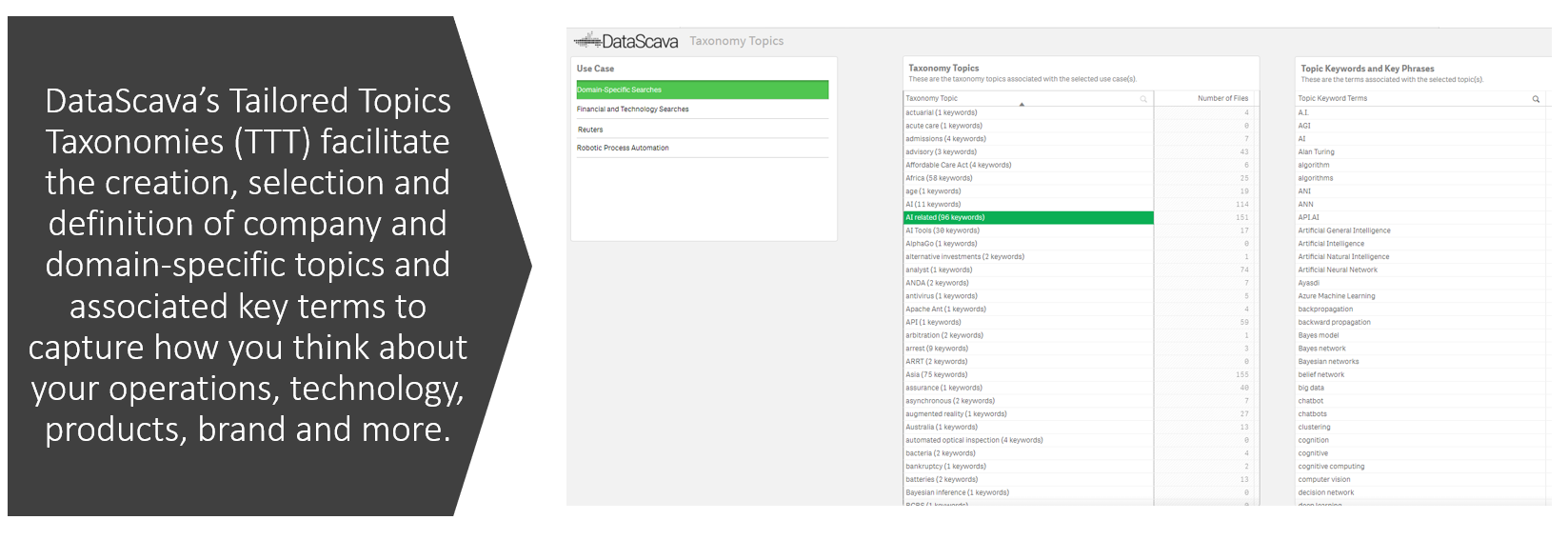

Tailored Topics Taxonomies model and capture features and topics within unstructured, heterogeneous text using specialized taxonomies you can select, edit, create, and import to capture your business language and domain expertise. This allows for the highly customized vocabulary and business logic necessary for accurate labeling and complex document processing.
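As a rough illustration of what a user-editable taxonomy might look like as data, the sketch below represents each topic as a set of key terms and supports importing, editing, and creating topics. The `Taxonomy` class, the assumed CSV layout (one `topic,term` pair per row), and the example topics are all hypothetical; this is not DataScava's file format or API.

```python
import csv
import io
from collections import defaultdict


class Taxonomy:
    """Hypothetical topic taxonomy: each topic maps to a set of key terms."""

    def __init__(self) -> None:
        self.topics: dict[str, set[str]] = defaultdict(set)

    def add_term(self, topic: str, term: str) -> None:
        # Terms are stored lowercase so later matching can be case-insensitive.
        self.topics[topic].add(term.strip().lower())

    def remove_term(self, topic: str, term: str) -> None:
        self.topics[topic].discard(term.strip().lower())

    @classmethod
    def from_csv(cls, text: str) -> "Taxonomy":
        # Assumed layout: a header row, then one "topic,term" pair per row.
        taxonomy = cls()
        for row in csv.DictReader(io.StringIO(text)):
            taxonomy.add_term(row["topic"], row["term"])
        return taxonomy


tax = Taxonomy.from_csv(
    "topic,term\n"
    "Fixed Income,bond\n"
    "Fixed Income,yield curve\n"
    "Cloud,AWS\n"
)
tax.add_term("Cloud", "Kubernetes")  # edit: extend an imported topic
print(sorted(tax.topics["Cloud"]))   # ['aws', 'kubernetes']
```

Keeping terms in plain sets makes every later label traceable to a specific, human-editable entry.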


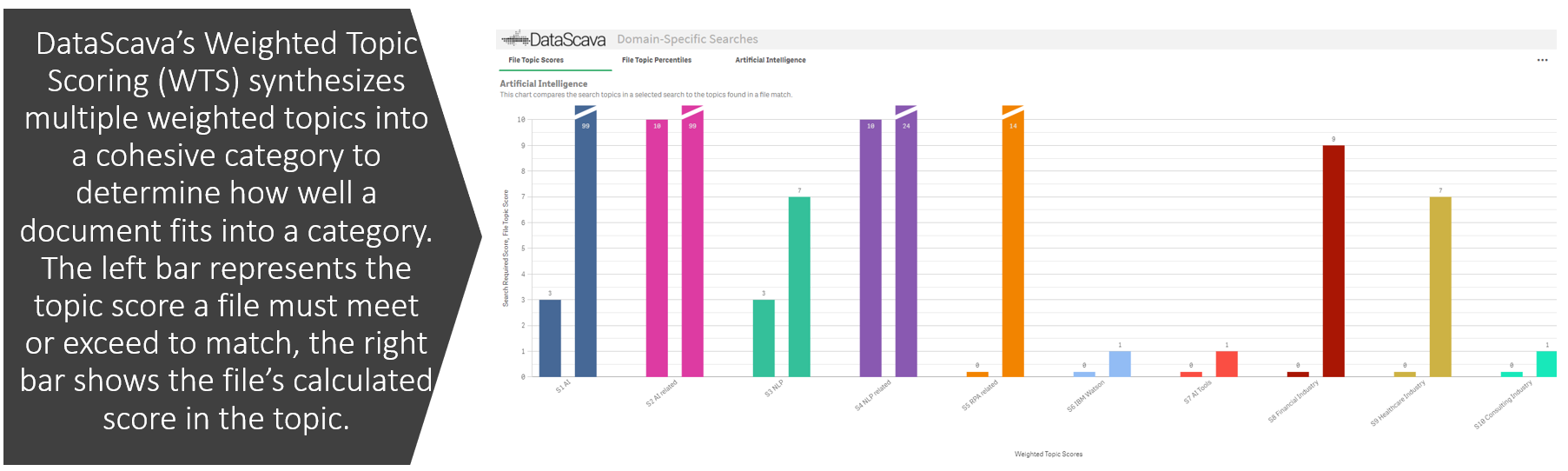

Weighted Topic Scoring accurately matches topics according to user-defined score thresholds and sorts documents into appropriate, cohesive categories using heuristic techniques tailored to a specific business purpose (e.g., curating quality training data, filtering and routing emails based on intent and content, or finding the right resume for a job opening).
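The patented Weighted Topic Scoring method itself is not described here, so the following is only a generic weighted-keyword sketch of the idea, not DataScava's algorithm. It assumes each key term carries a user-assigned weight, a document's topic score is the sum of the weights of the term occurrences it contains, and a document receives a topic label only when its score reaches that topic's threshold. All topics, weights, and thresholds are hypothetical.

```python
import re

# Hypothetical user-defined weights per topic and per key term.
TOPIC_WEIGHTS = {
    "Python Developer": {"python": 3.0, "django": 2.0, "flask": 2.0, "pandas": 1.0},
    "Data Engineer": {"spark": 3.0, "airflow": 2.0, "etl": 2.0, "sql": 1.0},
}
# Hypothetical minimum score a document needs to be labeled with a topic.
THRESHOLDS = {"Python Developer": 4.0, "Data Engineer": 4.0}


def topic_scores(text: str) -> dict[str, float]:
    """Sum each topic's term weights over every term occurrence in the text."""
    tokens = re.findall(r"[a-z0-9+#]+", text.lower())
    return {
        topic: sum(weights.get(tok, 0.0) for tok in tokens)
        for topic, weights in TOPIC_WEIGHTS.items()
    }


def label(text: str) -> list[str]:
    """Return the topics whose score meets the user-defined threshold."""
    scores = topic_scores(text)
    return [topic for topic, score in scores.items() if score >= THRESHOLDS[topic]]


resume = "Built Django and Flask services in Python; some SQL reporting."
print(topic_scores(resume))  # {'Python Developer': 7.0, 'Data Engineer': 1.0}
print(label(resume))         # ['Python Developer']
```

Because the weights and thresholds are explicit, a user can see exactly why a document was (or was not) labeled and adjust the numbers directly.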


Domain Specific Language Processing precisely indexes and measures the wording of each document based on its business context. It works at the file level to surface key results and relevant documents from large datasets, providing results you can see and control.
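DataScava's Domain Specific Language Processing is proprietary. As a loose illustration of indexing a document's wording against domain vocabulary, the sketch below counts whole-word, case-insensitive occurrences of multi-word key phrases (e.g. "yield curve") in each file, producing a per-document index that can be inspected directly. The phrase list and function names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical domain phrases; multi-word terms are matched as units.
DOMAIN_PHRASES = ["yield curve", "credit default swap", "cds", "duration"]


def build_pattern(phrases: list[str]) -> re.Pattern:
    # Longest phrases first so a long phrase wins over a shorter overlapping one;
    # \b anchors prevent matches inside other words (e.g. "cds" in "abcds").
    alternatives = sorted(phrases, key=len, reverse=True)
    body = "|".join(r"\s+".join(map(re.escape, p.split())) for p in alternatives)
    return re.compile(rf"\b(?:{body})\b", re.IGNORECASE)


def index_document(text: str, pattern: re.Pattern) -> Counter:
    """Count each domain phrase in the text, normalizing case and whitespace."""
    return Counter(" ".join(m.group(0).lower().split()) for m in pattern.finditer(text))


pattern = build_pattern(DOMAIN_PHRASES)
docs = {
    "memo1.txt": "The Yield  Curve inverted; CDS spreads widened.",
    "memo2.txt": "Portfolio duration was shortened ahead of the yield curve move.",
}
index = {name: index_document(text, pattern) for name, text in docs.items()}
print(dict(index["memo1.txt"]))  # {'yield curve': 1, 'cds': 1}
```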

All of this means far less time spent by human experts doing manual labeling of individual documents, and more time spent identifying the key emerging problems that need to be addressed in the data taxonomy as the business environment changes, or unforeseen problems emerge with AI document classification.

DataScava commissioned a series of articles from Scott Spangler, former IBM Watson Health Researcher, Chief Data Scientist, and author of the book “Mining the Talk: Unlocking the Business Value in Unstructured Information,” in which Scott discusses how and why DataScava’s patented precise approach to mining unstructured text data perfectly complements real-world big data applications in AI, LLMs, ML, RPA, BI, Research, Talent, and BAU applications. He also contrasts our Tailored Topics Taxonomies, Domain-Specific Language Processing, and Weighted Topic Scoring methodologies with standard approaches such as NLP.

In “Machines in the Conversation: The case for a more Data-Centric AI” (excerpts of which were reprinted in CDO Magazine), Scott discusses his views on the latest developments in generative AI, and argues that too much focus on generative AI distracts from the important value a more Data-Centric AI approach can provide to business applications. He then discusses the key technologies we use that enable such an approach within the organization:

- Topic models which reflect the primary areas of focus;

- Flexible topic scoring to encode the organization’s priorities;

- Customized text processing that mirrors the way people actually communicate in the industry.

Here’s an excerpt:

“High Accuracy and a Low Level of Expert Intervention

By focusing on the quality of the incoming training data stream, the business can ensure that the machine learning algorithm used continues to perform with high accuracy and a low level of expert intervention. This reduces wasted time for both the business and its customers.

AI Research organizations would do well to spend fewer resources generating new content with AI and more resources on figuring out how to accurately and sustainably ingest existing content in a way that makes us all able to do our jobs better.

Tools like DataScava help provide a platform for human-machine partnership which furthers creativity rather than just mimicking it.”

“Executive Q&A with Scott Spangler: DataScava, and ML”

In this Executive Q&A Scott discusses how and why DataScava’s patented precise approach to mining unstructured text data perfectly complements real-world big data applications in AI, LLMs, ML, RPA, BI, Research, Talent, and BAU applications. He also contrasts our Tailored Topics Taxonomies, Domain-Specific Language Processing, and Weighted Topic Scoring methodologies with standard approaches such as NLP.

Here’s an excerpt:

Scott, why don’t you tell us what you think are the shortcomings of the current way most businesses handle big data?

I believe businesses are in danger of giving too much critical decision-making power to machine learning algorithms. Don’t get me wrong, I’m a big fan of these approaches. Using the latest Deep Learning technology, computers can now take far more information into account than a person could ingest in a lifetime, performing some specific tasks with an accuracy comparable to the best experts in the field, all while being trained in a fraction of the time. With the exponential growth of unstructured information over the past decade, the ability of machines to ingest and use all that data is not only desirable but essential.

Nevertheless, it’s naïve to think that raw information by itself is enough to teach a machine how to behave over time, independent of business goals, dynamic environmental conditions, and the competitive landscape.

So what sort of solution do you advise?

I think machine learning algorithms need to be one part of a more holistic framework that includes user-controlled domain-specific ontologies, statistical analysis, and rule-based reasoning strategies. These are the basic ingredients that a tool like DataScava provides.

Why is that so important?

When we ingest a training set using a Machine Learning algorithm without due diligence, a host of unintended consequences may result: Training Bias, Labeling Errors, Brittleness, and Lack of Explainability, just to name a few of the more obvious problems that businesses are encountering today.

For those in our audience who are new to this area, can you explain a little more about what you mean by “Training Bias”?

Sure. Training Bias is another way to say that a deep learning algorithm is only as good as the input it is given, and if that is skewed either by the limitations of the data collection process or by accidental areas of missing coverage, then the model produced may have critical blind spots. Even when provided with examples that illustrate the problem, most algorithms will not adjust their results to account for a small minority of wrong outcomes.

So the old adage, “Garbage in, garbage out” still applies?

Exactly. And the same goes for labeling errors. Historical training sets that are labeled by human experts may incorporate the unconscious prejudices that those experts may hold. As an example, an expert hiring manager may not see himself as gender-biased, yet he may systematically and unconsciously route the resumes of female applicants to less senior roles in the company. Machine learning systems may learn this pattern from the labeled training data obtained from this hiring manager, thus codifying what were once purely personal prejudices.

And sometimes training data may not even be labeled by subject matter experts.

That’s true. Very often it falls to the Machine Learning team to label the data, and they may lack the necessary domain knowledge to do it correctly. So there are many reasons we need better tools to regulate the data labeling process to ensure the organization’s best practices and goals are incorporated.
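One simple form of due diligence on historically labeled data is to compare label rates across groups that should not affect the outcome. The sketch below uses made-up records in the spirit of the hiring-manager example: it computes, per group, the share of examples labeled for a senior role and flags the set when the gap exceeds a tolerance. The field values and the 0.2 tolerance are hypothetical, and a flagged gap is a prompt for expert review, not proof of bias.

```python
from collections import defaultdict

# Made-up historical labels: (applicant group, role level assigned by the manager).
records = [
    ("female", "junior"), ("female", "junior"), ("female", "senior"), ("female", "junior"),
    ("male", "senior"), ("male", "senior"), ("male", "junior"), ("male", "senior"),
]


def senior_rate_by_group(rows):
    """Fraction of each group's examples labeled 'senior'."""
    totals, seniors = defaultdict(int), defaultdict(int)
    for group, level in rows:
        totals[group] += 1
        seniors[group] += level == "senior"
    return {g: seniors[g] / totals[g] for g in totals}


def flag_disparity(rates, tolerance=0.2):
    """True if the gap between the highest and lowest group rate exceeds the tolerance."""
    return max(rates.values()) - min(rates.values()) > tolerance


rates = senior_rate_by_group(records)
print(rates)                  # {'female': 0.25, 'male': 0.75}
print(flag_disparity(rates))  # True
```

Running a check like this before training keeps a labeler's unconscious pattern from silently becoming the model's rule.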

I can see how legacy data sets could keep in place legacy problems. But you also mentioned Brittleness, is that also an issue with training data?

In a sense, it is. Training data that is obtained without regard to the underlying problem scope will frequently cluster in certain “popular” areas of the feature space. Other potential but less frequent feature combinations may have insufficient data to be properly modeled. The result is models that are brittle under real-world conditions.
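To make the brittleness point concrete, the sketch below counts how many training examples fall into each combination of two hypothetical categorical features and reports the in-scope combinations that have fewer than a minimum number of examples. The feature names, values, data, and the `min_count` cutoff are illustrative assumptions.

```python
from collections import Counter
from itertools import product

# Hypothetical feature values that the business considers in scope.
INDUSTRIES = ["banking", "insurance", "healthcare"]
ROLES = ["developer", "analyst"]

# Made-up training examples, clustered in "popular" combinations.
training = (
    [("banking", "developer")] * 40
    + [("banking", "analyst")] * 25
    + [("insurance", "developer")] * 3
    + [("healthcare", "analyst")] * 1
)


def sparse_combinations(examples, min_count=5):
    """Return in-scope feature combinations with fewer than min_count examples."""
    counts = Counter(examples)
    return [
        (combo, counts[combo])
        for combo in product(INDUSTRIES, ROLES)
        if counts[combo] < min_count
    ]


for combo, n in sparse_combinations(training):
    print(combo, n)
```

The list of in-scope values is exactly the kind of knowledge an ontology supplies: a model trained only on the raw examples has no way of knowing that the empty combinations exist.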

Can you say more about what you mean by “feature space”?

Sure. A feature is essentially one level up from a word. An example would be a word together with all the other words that mean the same thing, its synonyms. A feature space is the set of all the different features that occur in a data set and get measured by our learning algorithm. There are different methods for doing this, but fundamentally this is how a piece of text gets translated into a meaningful numeric representation. Of course, the complexity of the feature translation affects the explainability of the algorithm’s results.
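A minimal illustration of the word-to-feature step just described: each feature is a canonical term plus its synonyms, and a document becomes a vector of feature counts. The synonym table is hypothetical, and real systems use many other feature methods; this one is chosen only because every vector component can be traced back to the words that produced it.

```python
import re

# Hypothetical features: canonical name -> words treated as the same feature.
FEATURES = {
    "car": {"car", "automobile", "vehicle"},
    "repair": {"repair", "fix", "service"},
}
FEATURE_ORDER = list(FEATURES)  # fixed column order for the numeric vector


def to_vector(text: str) -> list[int]:
    """Count, per feature, the words in the text that belong to that feature."""
    words = re.findall(r"[a-z]+", text.lower())
    return [sum(w in FEATURES[f] for w in words) for f in FEATURE_ORDER]


print(to_vector("The automobile needs service; fix the vehicle soon."))  # [2, 2]
```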

And the problem of Explainability—why is that so important? If the algorithm is right, do we need to know why?

Not in every case, but frequently we do. The thing is, a reasoning process that is inexplicable is also difficult to debug when it goes awry. If all you can do to fix a problem is add or remove training data, there is no guarantee that even if the error temporarily vanishes, it won’t crop up again the next time the training set is updated.

And so, how does a tool like DataScava help address all these issues?

By providing tools for capturing the key underlying topics and rules that govern the concepts most important to the business, it levels the playing field so that Machine Learning no longer has to have the final say in critical business decisions.

This is much more than just straightforward Natural Language Processing (NLP). Processes like DataScava’s Domain Specific Language Processing (DSLP) and patented Weighted Topic Scoring (WTS) enable the organization to construct customized filters that reflect how the best human domain experts conceptualize the important issues.

DataScava can supervise the process based on human-provided expertise and determine which data to use for training and which to avoid, as well as in what situations to trust Deep Learning decisions and when to fall back on more rule-based approaches.
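One way to read “when to trust Deep Learning decisions and when to fall back on more rule-based approaches” is as a confidence gate: accept the model's label when its confidence is high, otherwise use a rule-based label, and record which path made the decision. The sketch assumes a model that returns a (label, confidence) pair; `toy_model`, `toy_rules`, and the 0.8 threshold are hypothetical stand-ins, not DataScava APIs.

```python
from typing import Callable, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def decide(text: str,
           model_predict: Callable[[str], Prediction],
           rule_label: Callable[[str], Optional[str]],
           min_confidence: float = 0.8) -> Tuple[str, str]:
    """Return (label, source), where source records which path made the call."""
    label, confidence = model_predict(text)
    if confidence >= min_confidence:
        return label, "model"
    fallback = rule_label(text)
    if fallback is not None:
        return fallback, "rules"
    # No rule applies: keep the model's label but mark it for human review.
    return label, "model-low-confidence"


# Hypothetical stand-ins for a trained classifier and a keyword rule.
def toy_model(text: str) -> Prediction:
    return ("billing", 0.95) if "invoice" in text else ("other", 0.55)


def toy_rules(text: str) -> Optional[str]:
    return "cancellation" if "cancel" in text.lower() else None


print(decide("Question about my invoice", toy_model, toy_rules))  # ('billing', 'model')
print(decide("Please cancel my plan", toy_model, toy_rules))      # ('cancellation', 'rules')
```

Recording the decision source makes it possible to audit later how often each path was used and whether the threshold needs adjusting.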

So it’s not an either/or proposition? You can use both DataScava and Machine Learning to solve big data problems more effectively?

That’s right. It’s very similar to the architecture of the human brain. The two halves of our brain pay attention in different ways. The right half lives in the moment, taking in real-time sensory data and responding to it in an instantaneous and intuitive fashion that is mostly inexplicable to consciousness. The left half of the mind is more detached and abstract, conceptualizing the universe in terms of intentions, reasons, agents, and consequences. The right half reacts and motivates, the left half reflects and strategizes.

Today’s Deep Learning systems mimic the right half of the mind. They represent compiled knowledge that reacts based on learning from past examples how to respond to new input. But in nature, such a single-minded approach lost out to the two hemispheres that we see in most animals today.

In much the same way, the modern business environment requires more flexibility than AI systems alone can provide. There needs to be a parallel and complementary approach to decision systems that takes a bigger picture view of what is known about the data universe.

And that’s where DataScava comes in?

Exactly. DataScava—in partnership with a trained human mind—can act as a tool for giving the left brain an equal say in big data decision-making tasks.

Scott, I know ontologies have always been a particular focus of your research. What role do ontologies (or taxonomies) play in the process of big data analysis?

You need ontologies to maintain awareness of an ever-changing data landscape. Data Science incorporates processes such as regression, classification, clustering, dimensionality reduction, and machine learning. These processes represent a kind of toolkit that today’s practitioners employ whenever they face a business problem to solve using information available in a big data set.

But this toolkit lacks a fundamental component that is critical in most real-world settings if the solution obtained is to be practical, accurate, persistent, and transparent: This missing element is an Ontological Toolkit to go along with the familiar data science tools.

For DataScava, this is represented as Topics and Key Terms in Tailored Topics Taxonomies, which are leveraged in its Weighted Topic Scoring methodology. These tools enable mapping the big data set to the real-world issues that must be addressed to solve the business problem.

To take a concrete example, say our big data set is resumes submitted to job openings, and the business problem is mapping those resumes to job openings. Here, the ontology of domain-specific DataScava Topics would be the different industries, job roles, skills, experience, location, and other attributes, and the features would be the keywords and key phrases relevant to the topics.
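A sketch of the resume example under simple assumptions: each topic (skill, industry, location) has key terms, a job opening lists the topics it requires, and resumes are ranked by the fraction of required topics they cover. The topic names, terms, and the coverage-based ranking are hypothetical and deliberately simpler than weighted scoring.

```python
import re

# Hypothetical ontology fragment: topic -> key terms / phrases.
TOPICS = {
    "Skill: Java": ["java", "spring boot"],
    "Skill: Kubernetes": ["kubernetes", "k8s"],
    "Industry: Banking": ["bank", "banking", "trading desk"],
    "Location: New York": ["new york", "nyc"],
}


def topics_in(text: str) -> set[str]:
    """Return topics with at least one key term present as a whole word or phrase."""
    lowered = text.lower()
    return {
        topic for topic, terms in TOPICS.items()
        if any(re.search(rf"\b{re.escape(t)}\b", lowered) for t in terms)
    }


def rank_resumes(required: set[str], resumes: dict[str, str]) -> list[tuple[str, float]]:
    """Rank resumes by the fraction of the job's required topics they cover."""
    ranked = [
        (name, len(required & topics_in(text)) / len(required))
        for name, text in resumes.items()
    ]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


job = {"Skill: Java", "Skill: Kubernetes", "Industry: Banking"}
resumes = {
    "alice": "Java and Spring Boot developer at a NYC bank; deployed on K8s.",
    "bob": "Python data scientist, healthcare analytics, New York.",
}
print(rank_resumes(job, resumes))  # [('alice', 1.0), ('bob', 0.0)]
```

Here the topics play the role of the ontology and the key terms are the features, so each match can be explained by pointing at the terms that triggered it.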

I see. So the ontology is a kind of Rosetta Stone – a way to translate between data and business requirements.

That’s right. Scientists have used ontologies for centuries to make sense of the data they collect. In business, they can represent the key expertise and processes that allow a business to function and out-perform its rivals in its chosen market. If we don’t capture these ontologies and use them as part of our big data analytics, we risk creating processes that systematize past bad decisions while making the business less flexible. In the long run, without ontologies to guide our thinking, we risk model stagnation over time, thus losing our competitive edge.

DataScava provides a practical, easy-to-use toolset for capturing the critical business ontologies that provide the bridge between unstructured data analysis using standard data science techniques and the human expertise that gives your business its competitive edge.

But if ontologies capture what you already know about your business, how do you become aware of new, emerging trends and issues?

It’s true, ontologies help identify and catalog the known, but our knowledge of what’s going on in and around our business will always be incomplete. Therefore, ontologies will always have some missing elements, some uncatalogued or miscellaneous classes.

Statistics and data visualization strategies help guide us in identifying new categories in our data, or in discovering new connections between existing categories that point to opportunities, whether in the marketplace or in improving internal processes.

For example, a strong correlation between the occurrence of two otherwise unrelated categories in an ontology may indicate a cross-selling opportunity in sales or a preventive maintenance opportunity in customer service. Similarly, trend and bar charts graphing multiple categories over time may help the analyst uncover ways in which the dynamic business environment is changing, allowing problems to be discovered before they cause irreparable damage to customer satisfaction or market share.
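The correlation idea can be sketched with per-document category flags: for each pair of categories, compute the Pearson correlation of their occurrence across documents (on 0/1 data this equals the phi coefficient) and surface strongly correlated pairs for an analyst. The categories, flags, and the 0.5 cutoff are made up for illustration; a correlation is a lead to investigate, not evidence of causation.

```python
from itertools import combinations
from math import sqrt

# Made-up per-document category flags (1 = category present in the document).
docs = [
    {"late delivery": 1, "refund request": 1, "printer jam": 0},
    {"late delivery": 1, "refund request": 1, "printer jam": 0},
    {"late delivery": 0, "refund request": 0, "printer jam": 1},
    {"late delivery": 1, "refund request": 0, "printer jam": 0},
    {"late delivery": 0, "refund request": 0, "printer jam": 1},
    {"late delivery": 0, "refund request": 0, "printer jam": 0},
]


def pearson(xs, ys):
    """Pearson correlation; on 0/1 data this equals the phi coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy) if vx and vy else 0.0


def correlated_pairs(rows, cutoff=0.5):
    """Category pairs whose co-occurrence correlation is at least the cutoff."""
    cats = sorted(rows[0])
    out = []
    for a, b in combinations(cats, 2):
        r = pearson([d[a] for d in rows], [d[b] for d in rows])
        if r >= cutoff:
            out.append((a, b, round(r, 3)))
    return out


print(correlated_pairs(docs))  # [('late delivery', 'refund request', 0.707)]
```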

By producing numeric score measurements of what the raw unstructured textual data contains in the typical structured data format used by business intelligence and data visualization tools, DataScava Indexes enable a world of analysis not possible using NLP, Machine Learning, or AI. On its own, this metadata can support many of the applications intelligent systems are used for.
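The point about structured output can be illustrated by writing per-document topic scores as a long-format CSV table (one row per document and topic), a layout that spreadsheet and BI tools can generally load directly. The column names and sample scores are hypothetical; this is not DataScava's export format.

```python
import csv
import io

# Hypothetical scores produced by a topic scorer: document -> topic -> score.
scores = {
    "resume_001.txt": {"Java": 7.0, "Cloud": 2.5},
    "resume_002.txt": {"Java": 0.0, "Cloud": 6.0},
}


def scores_to_csv(doc_scores: dict[str, dict[str, float]]) -> str:
    """Flatten nested scores into long-format CSV: document, topic, score."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["document", "topic", "score"])
    for doc, topic_scores in sorted(doc_scores.items()):
        for topic, score in sorted(topic_scores.items()):
            writer.writerow([doc, topic, score])
    return buffer.getvalue()


print(scores_to_csv(scores))
```

The long format is chosen because adding a new topic adds rows rather than columns, so downstream charts and queries do not need to change when the taxonomy grows.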

That sounds like a powerful way to make sense of what’s happening. But how do you respond once something is detected?

If ontologies are the nouns of our business vocabulary, then rules are the verbs. Rules connect the dots to complete the picture, enabling the business to take action.

The basic form of a Rule is that a conclusion follows from a premise: if the premise holds, the conclusion is true.

We have identified an inferred property, and the Rule makes that inference explicit and computable.
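A rule of the form “if premise, then conclusion” can be made computable by pairing a predicate over a document's topic scores with the property it infers. The rule names, topics, and thresholds below are hypothetical; the sketch only shows that each inferred property can carry the name of the rule that produced it, which keeps the inference explicit and explainable.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Scores = Dict[str, float]  # topic -> score for one document


@dataclass
class Rule:
    name: str
    premise: Callable[[Scores], bool]  # condition over topic scores
    conclusion: str                    # property inferred when the premise holds


# Hypothetical rules; thresholds are illustrative only.
RULES = [
    Rule(
        "senior-quant",
        lambda s: s.get("Quant Finance", 0) >= 5 and s.get("Management", 0) >= 3,
        "candidate for senior quant role",
    ),
    Rule("escalate", lambda s: s.get("Legal Threat", 0) > 0, "route to legal team"),
]


def infer(scores: Scores, rules: List[Rule]) -> List[Tuple[str, str]]:
    """Apply every rule; return (conclusion, rule name) for each premise that holds."""
    return [(r.conclusion, r.name) for r in rules if r.premise(scores)]


print(infer({"Quant Finance": 6, "Management": 4}, RULES))
# [('candidate for senior quant role', 'senior-quant')]
```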

But isn’t that similar to machine learning – classifying based on learning from the past? Are these rules written by humans or by algorithms?

Both. Deep learning and rule-based inference can do similar tasks with the same input: identifying and classifying data elements. It’s important not to see these two methods as competing approaches, but as complementary ones. Like the two halves of the human brain, each does well where the other is deficient.

A deep learning algorithm maps input to output in a very comprehensive and systematic way — a way that captures how things work most of the time — having no conscious knowledge of why they work that way. A rule-based algorithm captures the underlying reasons and causes behind the way things work while always being bound by the limits of conscious understanding.

So one could imagine both machine learning and DataScava classifying the same input but in very different ways? Would they ever disagree?

Of course. When a deep learning system and DataScava agree on a classification, that’s ideal, because we then have a plausible explanation for why the deep learning algorithm decided the way it did.

When they disagree, it could mean that the rule base is incomplete and needs augmenting. Or, it may mean that the deep learning algorithm is lacking training data near the data element, or that something has changed to make the original model generated from the training set stale or outdated.
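The agree/disagree check lends itself to a small triage step: compare the model's label with the rule-based label for each document, and collect disagreements and rule-base gaps for expert review. The labels and document IDs below are made up, and deciding which side is wrong in a disagreement remains a human judgment.

```python
# Made-up labels for the same documents from a trained model and from rules
# (None means no rule fired for that document).
model_labels = {"d1": "billing", "d2": "outage", "d3": "billing", "d4": "sales"}
rule_labels = {"d1": "billing", "d2": "billing", "d3": "billing", "d4": None}


def triage(model, rules):
    """Split documents into agreements, disagreements, and rule-base gaps."""
    agree, disagree, no_rule = [], [], []
    for doc_id, predicted in model.items():
        ruled = rules.get(doc_id)
        if ruled is None:
            no_rule.append(doc_id)                       # rule base may be incomplete
        elif ruled == predicted:
            agree.append(doc_id)                         # rules explain the model's call
        else:
            disagree.append((doc_id, predicted, ruled))  # needs expert review
    return agree, disagree, no_rule


agree, disagree, no_rule = triage(model_labels, rule_labels)
print(len(agree) / len(model_labels))  # 0.5
print(disagree)                        # [('d2', 'outage', 'billing')]
print(no_rule)                         # ['d4']
```

Tracking the agreement rate across successive batches is one simple way to notice when a model may be going stale.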

In any case, it’s an opportunity to make improvements over time and help real-world big data systems to be effective in the long run.

So, in summary, it’s a team effort, not a competition.

Yes. In the final analysis, no single algorithm, no matter how sophisticated, can replace the flexibility and adaptability of human judgment to respond to dynamic business challenges. For the foreseeable future, adjunct tools like DataScava will continue to play a crucial role in allowing data scientists to build flexible architectures that can adapt and be maintained over time, ensuring both the reliability of present results and continuous improvement.

In his article “Who’s in Charge of Your Business: The Humans or the Machines?”, Scott discusses:

- The pitfalls of using a fully automated approach to critical decision-making;

- The desirability of having a parallel human-machine partnership that regulates and monitors the inputs and outputs of automated approaches;

- The three basic ingredients that are needed to make that hybrid process successful and how DataScava implements each of these components.

“Algorithms will be more effective in the long run if they are part of a more holistic framework that includes user-controlled domain-specific ontologies, statistical analysis, and rule-based reasoning strategies. These are the basic ingredients that a tool like DataScava provides.”

In his most recent article, “Machines in the Conversation: The Case for a more Data-Centric AI,” Scott shares his views on the latest developments in generative AI. He argues that too much focus on generative AI distracts from the important value a more Data-Centric AI approach can provide to business applications.

He then discusses the key technologies we use to enable such an approach within the organization:

- Topic models which reflect the primary areas of focus;

- Flexible topic scoring to encode the organization’s priorities;

- Customized text processing that mirrors the way people actually communicate in the industry.

Our Approach

Domain-Specific Language Processing (DSLP)

Weighted Topic Scoring (WTS)

Tailored Topics Taxonomies (TTS)

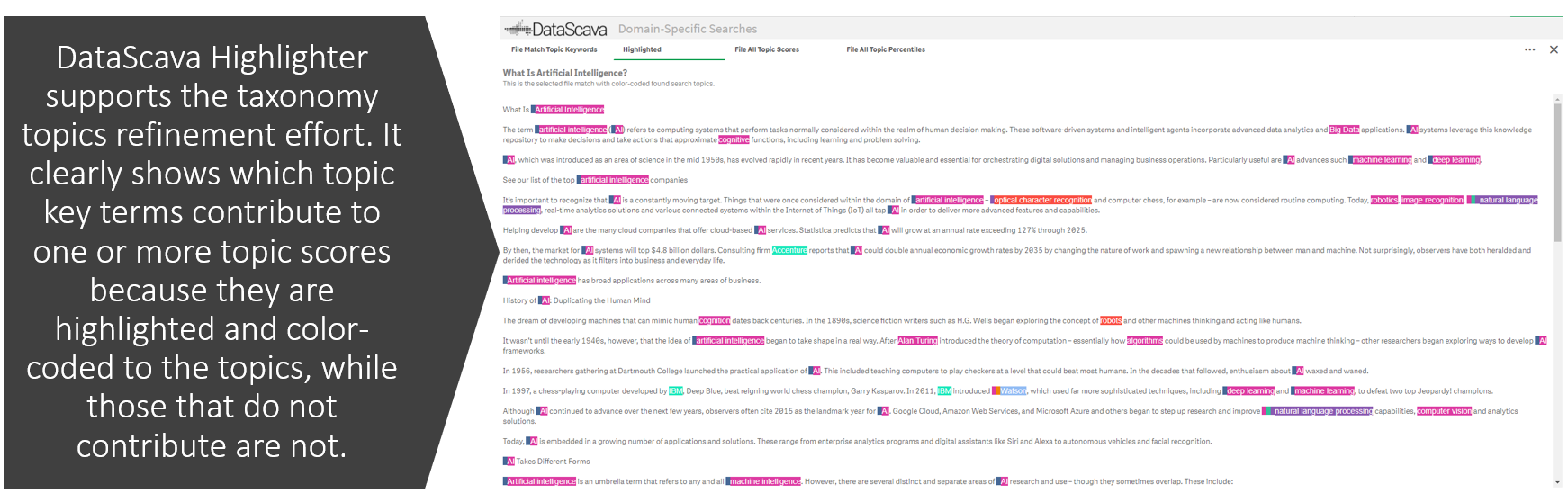

Highlighter

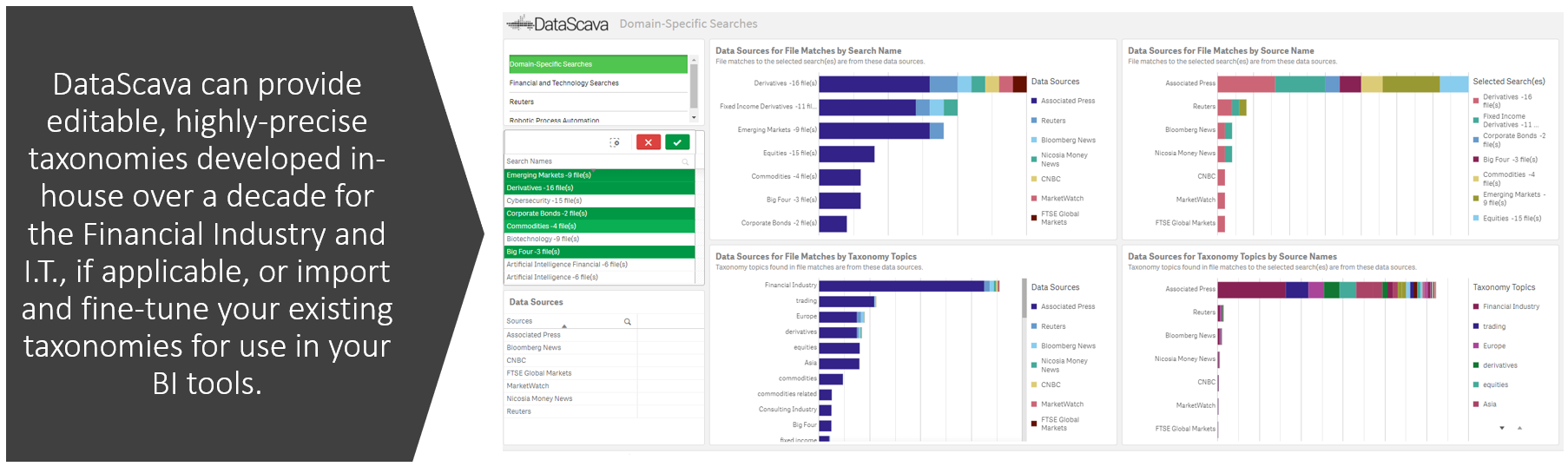

Taxonomies for Financial and IT Domains

Taxonomies for Talent Matching and Skills Analytics