Our Approach is Fine-Tuned to Your Needs

Companies use AI, LLMs, Machine Learning, RPA, Business Intelligence, NLP, and NLU to try to make unstructured text data more accessible, understandable, and actionable. A key first step is to identify highly precise subsets of documents with relevant context and content for use in training data, analysis, and automation.

Up to 80% of these companies’ effort goes into this time-consuming process of finding, cleaning, and reorganizing huge amounts of messy data. These systems require accurate input: a system focused on scientific research about “viral infections” like COVID-19 must not be fed documents about “viral Tweets.”

But mining unstructured text data isn’t simple. To work effectively, a solution must be fine-tuned to meet your specific organization’s needs and address the quirks in your textual content. DataScava helps you derive value from your data using our proprietary methodologies and your business language and domain expertise, keeping the Human in Command.

Personalized Criteria You Control

NLP and NLU analyze words, then phrases, sentences, and, if you are lucky, entire paragraphs. It’s a bottom-up approach that processes information you hope leads to relevant output.

DataScava does not interpret input data, disambiguate natural language, or infer what you’re looking for — it measures and finds what you are looking for. It shows a graphical and numeric representation of it, akin to an oscilloscope in electronics, and this informs the data discovery process by providing corpus-wide measurements of each topic of interest.

Armed with these precise measurements, users gain great insight into their unstructured textual data based on personalized criteria they control.

Accurate, Visible, and Designed for Constant Improvement

Business applications for today’s data-driven systems are designed to make recommendations, route data to a defined destination, and/or trigger a process. These tasks depend on a set of predefined triggers that the system has been trained to react to. DataScava can accurately route and trigger while providing insight into the process.

Some automated systems require a high degree of precision and auditable results. Being right “most of the time” is not enough, because mistakes can be disastrous. And while these systems can handle complex inputs quickly, one challenge we have encountered arises when they are given “multi-intent” input but react only to a single primary intent.

Our solution supports “not” conditions, which provide any complexity required for multi-intent applications (e.g., “a” implies “b,” unless “c” or “d” is present, in which case it means “e” if “f” is not present, in which case it means “g” . . . ).
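The nested rule in the example above can be sketched in a few lines of Python. This is only an illustration of how “not” conditions compose, not DataScava’s implementation. The function name `resolve_intent` is hypothetical, and the sketch assumes one reading of the example: “a” means “b” by default; if “c” or “d” is present it means “e”, unless “f” is also present, in which case it means “g”.

```python
from typing import Optional, Set


def resolve_intent(terms: Set[str]) -> Optional[str]:
    """Resolve a single meaning from the set of terms found in a document.

    Hypothetical illustration of a multi-intent rule with "not" conditions.
    """
    if "a" not in terms:
        return None  # the rule only applies when "a" is present
    if "c" in terms or "d" in terms:
        # "c" or "d" overrides the default reading of "a";
        # the outcome then depends on whether "f" is absent or present.
        return "e" if "f" not in terms else "g"
    return "b"  # default reading: "a" implies "b"


print(resolve_intent({"a"}))            # default case
print(resolve_intent({"a", "c"}))       # override without "f"
print(resolve_intent({"a", "d", "f"}))  # override with "f"
```

Because each branch is explicit, a reviewer can trace exactly which condition produced a given result, which is the kind of auditability that multi-intent applications call for.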

DataScava is designed for constant improvement and allows the whole iterative process to happen as a matter of course. Because of the accuracy and visibility mentioned above, tweaking the model becomes simple and obvious.

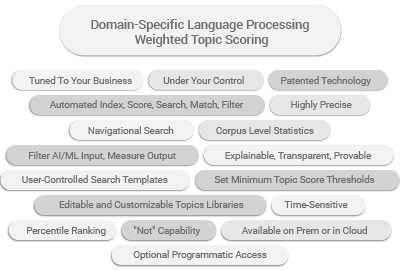

Domain-Specific Language Processing and Weighted Topic Scoring

DataScava helps bridge the gaps described above by ensuring that input is relevant, thus improving the quality of results while reducing the risk of inappropriate analysis and badly informed decisions. All the while, it increases your business and data teams’ efficiency.

Domain-Specific Language Processing (DSLP) and patented Weighted Topic Scoring (WTS) leverage users’ subject matter expertise and their own business and domain language to identify precise information and present it in context.

Because they enable both non-technical and technical people to capture the abstract topics, themes, and nomenclature that represent their own business, they are a powerful adjunct or alternative to NLP, NLU, Semantic, and Boolean Search.
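To make the idea of scoring documents against user-defined topics concrete, here is a minimal Python sketch. It is not the patented Weighted Topic Scoring method, whose details are not described here. It assumes a simple stand-in: a topic is a dictionary of domain terms with user-chosen weights, and a document’s score is the weighted count of whole-word matches. The names `topic_score` and `VIRAL_INFECTION`, the weights, and the sample documents are all hypothetical.

```python
import re
from typing import Dict


def topic_score(text: str, weighted_terms: Dict[str, float]) -> float:
    """Return the weighted count of topic terms found in the text.

    Assumed scoring rule for illustration only: weight * occurrences,
    summed over all terms, matching whole words or phrases.
    """
    lowered = text.lower()
    score = 0.0
    for term, weight in weighted_terms.items():
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        score += weight * len(re.findall(pattern, lowered))
    return score


# Hypothetical topic written in the user's own domain language.
# A negative weight pushes off-topic documents down.
VIRAL_INFECTION = {
    "viral infection": 3.0,
    "covid-19": 2.0,
    "vaccine": 1.0,
    "tweet": -2.0,
}

docs = {
    "paper": "A viral infection such as COVID-19 may be prevented by a vaccine.",
    "post": "Her tweet went viral overnight.",
}

scores = {name: topic_score(text, VIRAL_INFECTION) for name, text in docs.items()}
for name, value in scores.items():
    print(name, value)
```

In this toy setup the research sentence scores well above the social media post, and a subject matter expert can adjust the terms and weights without touching the scoring code.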

Why We Don’t Use NLP or Semantics

Although NLP and Semantics are powerful and versatile technologies, proponents with real-world experience will acknowledge that NLP is hard and complicated to set up and use. In general, NLP is useful for relatively simple tasks, such as automating a phone attendant’s call routing or a chatbot’s responses.

AI can take it a step further, but it certainly doesn’t summarize an entire document or provide any means to compare, measure, and filter documents so that users can view and adjust the output in normal use.

In addition, business use cases for AI solutions for large bodies of textual data largely focus on routing documents to a particular destination and/or taking some action based on their contents (initiate a process, set an alert, send an email). This requires summarizing the document overall.

DataScava works at the document level, summarizing textual content in a usable, numerical form for routing purposes or to trigger an action using a process that is adjustable by users.
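As a rough illustration of routing on a numerical, document-level summary, the following Python sketch picks a destination from per-topic scores. It is an assumption-laden example, not DataScava’s process. The function `route`, the threshold value, the topic names, and the `manual_review` fallback are all hypothetical.

```python
from typing import Dict


def route(
    topic_scores: Dict[str, float],
    threshold: float = 5.0,
    fallback: str = "manual_review",
) -> str:
    """Route a document to its highest-scoring topic.

    The document goes to the fallback destination when no topic
    reaches the user-adjustable threshold.
    """
    if not topic_scores:
        return fallback
    best_topic = max(topic_scores, key=topic_scores.get)
    if topic_scores[best_topic] >= threshold:
        return best_topic
    return fallback


print(route({"research": 6.0, "social": 1.0}))
print(route({"research": 2.0, "social": 1.0}))
```

Exposing the threshold as a parameter reflects the point that the process should be adjustable by users rather than fixed inside the system.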