Mine Messy Unstructured Text Data

Experts estimate that as much as 90% of the digital data generated daily is unstructured. This includes emails, documents, incident reports, text files, videos, contracts, customer chats, audio files, social media, presentations, blog posts, resumes, profiles, photos, and more. Finding precise, relevant information in this daunting volume of data, and turning it into business value, is a major challenge.

DataScava is an unstructured data miner built on patented matching technology. It generates file-level metadata about unstructured text, along with searchable document indices. It uses your own business and domain language to help you identify the high-value data you need for applications in AI, LLMs, Machine Learning, RPA, Business Intelligence, Talent, Research, business-as-usual (BAU) operations, and more. Use it to automatically classify, curate, search, filter, match, tag, visualize, label, and route raw textual content in real time to unlock its value.

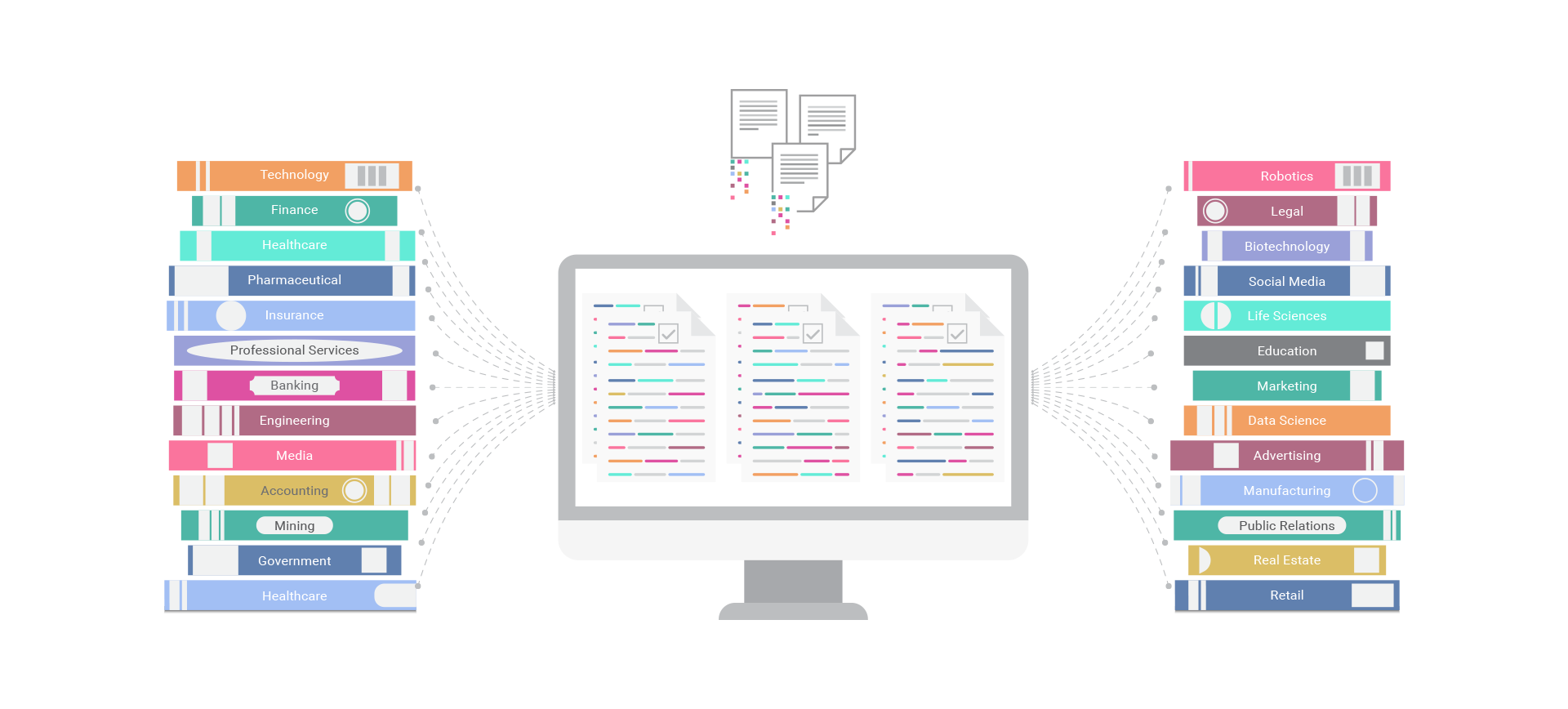

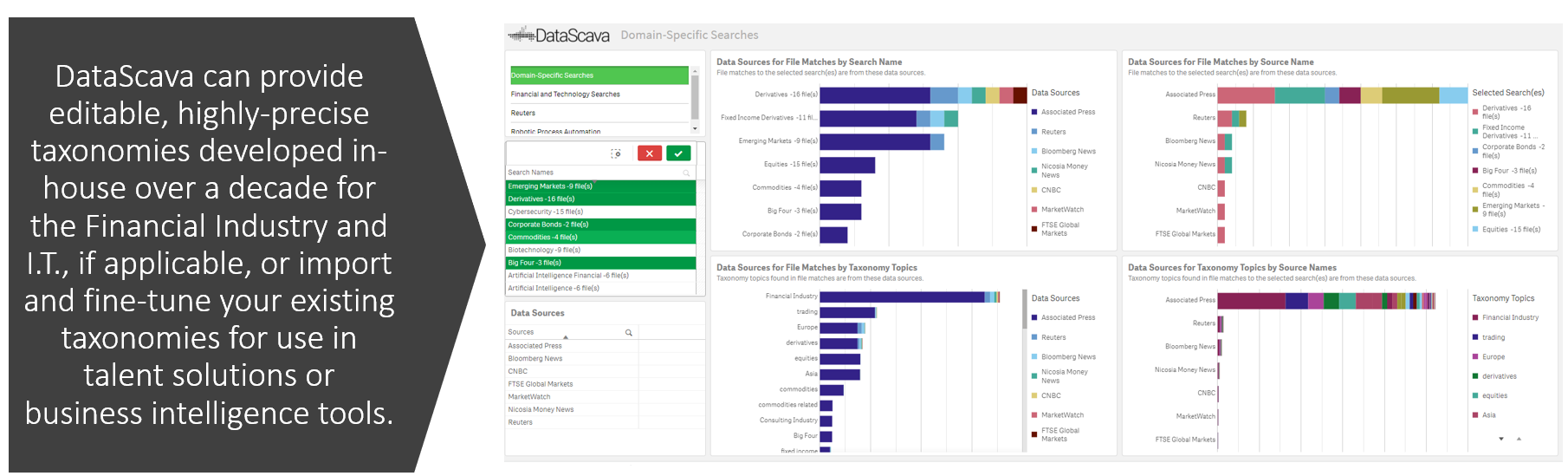

It can help you find and filter the most relevant documents or information in large unstructured datasets or raw text snippets, and mine unstructured text by source, content, intent, interests, and other criteria that you define, control, and weight. Our tools can be configured to fit each organization’s unique data universe and problem space. DataScava can also provide pre-configured, mature taxonomies for the Financial and Technology domains.

DataScava can be deployed in three ways:

- On-prem: our process reads from your database and writes an index back to it.

- Off-prem: we host the data, stored with the indexes in an AWS cloud database.

- Via API: an on-prem process retrieves index values through a REST API GET call.

Our Approach

DataScava helps you model and capture features and topics within heterogeneous text using specialized taxonomies that you can select, import, create, and edit in real time. These taxonomies define your business language and domain expertise, supporting the highly customized vocabulary and business logic that complex document processing requires.

Our text mining algorithms generate file-level metadata about raw textual content using three methodologies: Tailored Topics Taxonomies, Domain-Specific Language Processing, and Weighted Topic Scoring. Together they provide a highly precise alternative to Natural Language Processing (NLP), surfacing key results and the most relevant documents from large datasets, in context.

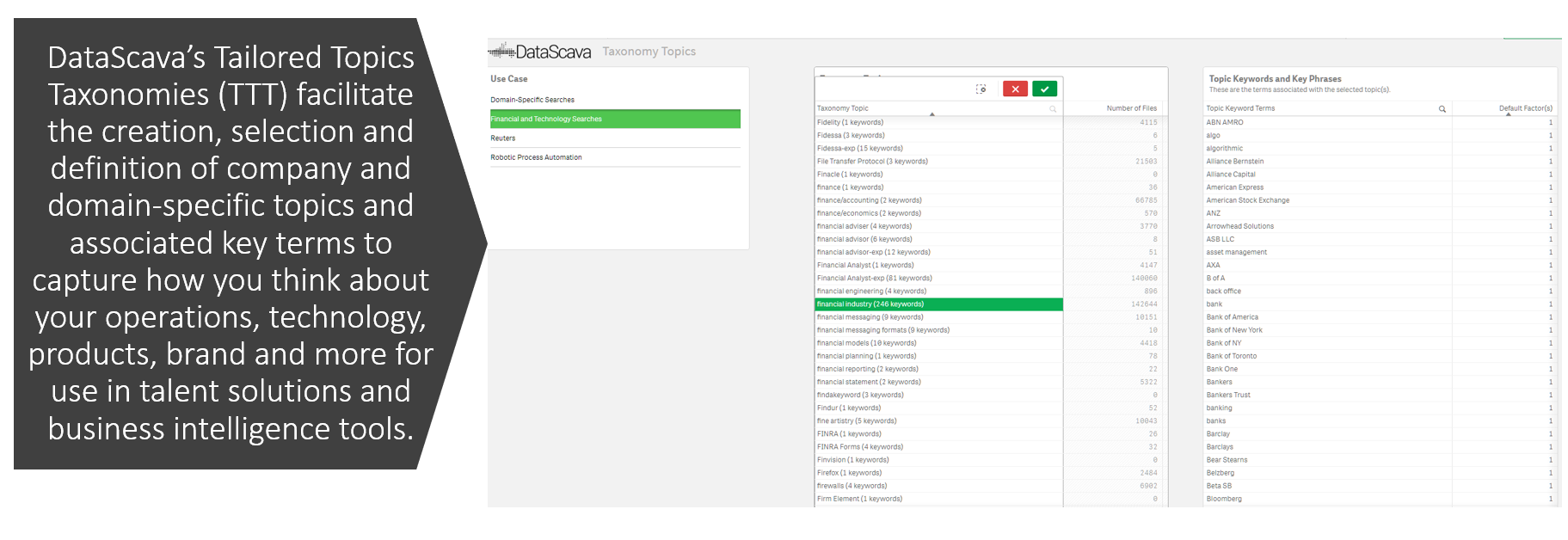

Tailored Topics Taxonomies (TTT)

TTT facilitates the selection, creation, and definition of your organization’s domain-specific topics to capture how you think about your operations, technology, products, and brand. Users can create new topics and modify existing ones in real time without any new programming. Each topic can have an unlimited number of associated keywords and key phrases. For example, our Financial Domain topic has over 250 editable keywords and key phrases. We can provide pre-configured TTT for the Financial and IT domains.

Ongoing refinements and exceptions are handled by users adding, deleting, and editing topics and their key terms. For example, the topic “Viruses” would include the key term “Covid-19” in the medical domain but not in the IT domain. Over time, users continually refine DataScava Topics in a measurable way, producing increasingly accurate results and eliminating incorrectly indexed textual content.
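Because topics are data rather than code, a refinement amounts to editing a list of key terms. The sketch below is a hypothetical illustration, not DataScava's storage format: the `Taxonomy` class, its methods, and the sample terms are all assumptions. It replays the "Viruses" example, where the same sentence counts toward the medical taxonomy's topic but not the IT one, and shows that removing a term changes the result without any code change.

```python
import re
from collections import defaultdict


class Taxonomy:
    """Hypothetical in-memory taxonomy: each topic maps to a set of key terms."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.topics: dict[str, set[str]] = defaultdict(set)

    def add_terms(self, topic: str, *terms: str) -> None:
        self.topics[topic].update(terms)

    def remove_terms(self, topic: str, *terms: str) -> None:
        self.topics[topic].difference_update(terms)

    def topics_mentioned(self, text: str) -> set[str]:
        """Return topics with at least one exact, whole-phrase key-term hit."""
        found = set()
        for topic, terms in self.topics.items():
            for term in terms:
                if re.search(r"(?<!\w)" + re.escape(term) + r"(?!\w)", text, re.IGNORECASE):
                    found.add(topic)
                    break
        return found


medical = Taxonomy("Medical")
medical.add_terms("Viruses", "Covid-19", "influenza")
it = Taxonomy("IT")
it.add_terms("Viruses", "malware", "ransomware", "trojan")

note = "Staff absences rose during the Covid-19 wave."
print(medical.topics_mentioned(note))  # {'Viruses'}
print(it.topics_mentioned(note))       # set()

# A user refinement is a data edit, not a code change:
medical.remove_terms("Viruses", "Covid-19")
print(medical.topics_mentioned(note))  # set()
```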

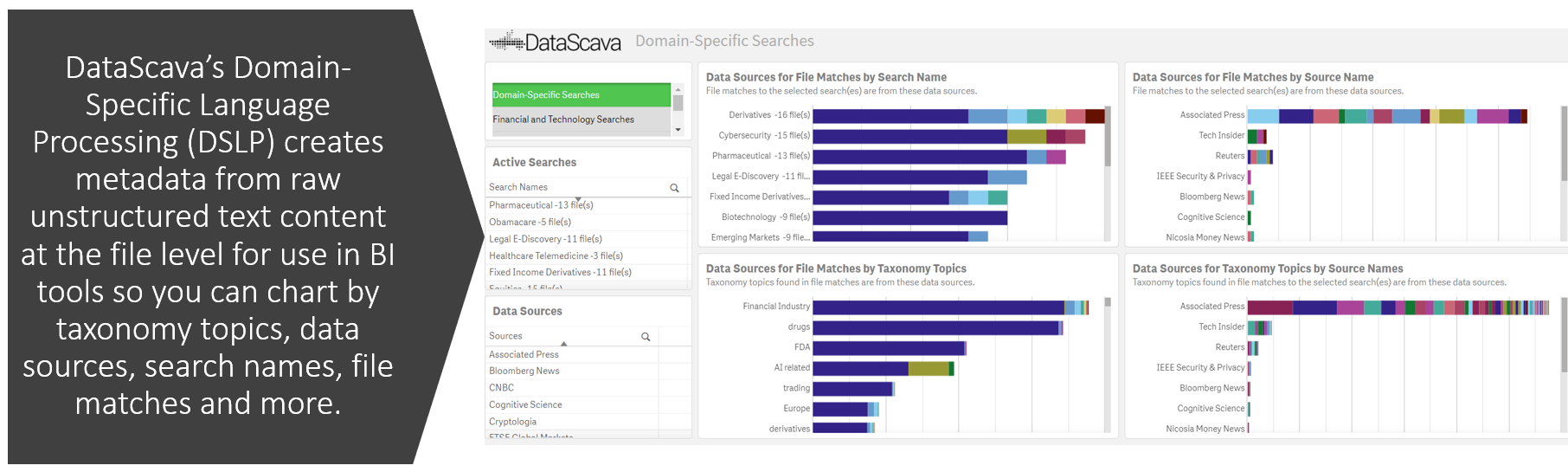

Domain-Specific Language Processing (DSLP)

Unlike NLP, DSLP does not expand key terms to all their word forms or filter out stop words when indexing and searching for the key terms associated with your taxonomy topics. It uses exactly the keywords, key phrases, and acronyms specified in your TTT. There is also a “not” capability: for example, the topic “Summit,” a financial software product, will not match “Summit, New Jersey,” “Summit, N.J.,” or “Summit, NJ.” You can also require exact title-case matches and set default minimum or maximum scores and key term factors for Weighted Topic Scores.
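To make the exact-match and "not" behavior concrete, here is a minimal sketch of one way such a matcher could work. It is an assumption, not DataScava's implementation: `KeyTerm` and `count_key_term` are hypothetical names, and the exclusion rule (skip a hit when an excluded phrase begins at the same position) is one plausible reading of the "not" capability.

```python
import re
from dataclasses import dataclass


@dataclass
class KeyTerm:
    """A key term within a topic (hypothetical structure, not DataScava's schema)."""
    phrase: str
    exact_case: bool = False        # require exact capitalization, e.g. "Summit"
    exclude: tuple[str, ...] = ()   # "not" phrases that cancel a hit at the same position


def count_key_term(text: str, term: KeyTerm) -> int:
    """Count exact, whole-phrase occurrences of term.phrase in text.

    No stemming, lemmatization, fuzzy matching, or stop-word removal is applied.
    """
    flags = 0 if term.exact_case else re.IGNORECASE
    # (?<!\w) and (?!\w) act as word boundaries that also work for phrases
    # that start or end with punctuation, such as "N.J."
    pattern = re.compile(r"(?<!\w)" + re.escape(term.phrase) + r"(?!\w)", flags)
    excluded = [re.compile(re.escape(x), flags) for x in term.exclude]
    count = 0
    for m in pattern.finditer(text):
        # "Not" capability: skip this hit if an excluded phrase begins here.
        if any(x.match(text, m.start()) for x in excluded):
            continue
        count += 1
    return count


summit = KeyTerm("Summit", exact_case=True,
                 exclude=("Summit, New Jersey", "Summit, N.J.", "Summit, NJ"))
text = "We migrated trades to Summit. Our office is in Summit, NJ. The summit was cold."
print(count_key_term(text, summit))  # 1
```

Only the first "Summit" counts: the second is cancelled by the "Summit, NJ" exclusion, and the lowercase "summit" fails the exact-case requirement.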

DataScava Indexers use DSLP to generate metadata at the file level that precisely measures topic frequency in the raw textual content. These scores do not use NLP functions such as fuzzy search, stemming, stop word removal, POS tagging, or lemmatization. Only textual data that contains topic key terms gets a score in the topic; textual content that does not mention a topic key term is not indexed.
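A rough sketch of file-level topic frequency scoring follows, under the assumption that a topic's score is simply the total count of exact key-term hits. DataScava's actual scoring (including key term factors) is proprietary, and `topic_scores` and the sample taxonomy are hypothetical. Note that a topic with no hits gets no entry at all, mirroring "textual content that does not mention a topic key term is not indexed."

```python
import re


def topic_scores(text: str, taxonomy: dict[str, list[str]]) -> dict[str, int]:
    """Score each topic by how often its key terms occur in text (exact phrases only).

    Topics whose key terms never appear get no entry at all, rather than a 0.
    """
    scores: dict[str, int] = {}
    for topic, key_terms in taxonomy.items():
        total = 0
        for term in key_terms:
            pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
            total += len(re.findall(pattern, text, flags=re.IGNORECASE))
        if total:
            scores[topic] = total
    return scores


taxonomy = {
    "Python": ["Python", "pandas", "NumPy"],
    "Cloud": ["AWS", "Azure", "GCP"],
    "Derivatives": ["swaps", "options", "futures"],
}
text = "Built pandas and NumPy pipelines in Python on AWS; migrated two Python services to AWS Lambda."
print(topic_scores(text, taxonomy))  # {'Python': 4, 'Cloud': 2}
```

Each key term is counted independently here, so overlapping terms (say, "Python" and "Python 3") would both score; a production indexer may treat overlaps differently.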

By producing numeric scores that measure what raw unstructured text contains, in the structured format typically used by business intelligence and data visualization tools, DataScava Indexers enable kinds of analysis that NLP, Machine Learning, or AI output alone does not provide. On its own, this topic-score metadata can support many of the applications for which intelligent systems are used.
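As an illustration of "structured data format," the sketch below flattens per-file topic scores into a CSV table with one row per file and one column per topic, a shape most BI tools can import. The function name and layout are assumptions, not DataScava's export format. Missing topics are left blank rather than 0, to keep "not indexed" distinct from a measured score.

```python
import csv
import io


def scores_to_csv(file_scores: dict[str, dict[str, int]]) -> str:
    """Flatten per-file topic scores into CSV: one row per file, one column per topic."""
    topics = sorted({t for scores in file_scores.values() for t in scores})
    buf = io.StringIO()
    # restval="" leaves a cell blank when a file has no score for that topic.
    writer = csv.DictWriter(buf, fieldnames=["file", *topics], restval="", lineterminator="\n")
    writer.writeheader()
    for name, scores in sorted(file_scores.items()):
        writer.writerow({"file": name, **scores})
    return buf.getvalue()


file_scores = {
    "resume_017.txt": {"Python": 4, "Cloud": 2},
    "report_q3.txt": {"Derivatives": 7},
}
print(scores_to_csv(file_scores))
# file,Cloud,Derivatives,Python
# report_q3.txt,,7,
# resume_017.txt,2,,4
```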

Context is Key

Context is key, and DSLP, WTS, and TTT work together to establish it through our patented “Profile Matching of Unstructured Documents,” named after a carpentry tool used to model complex shapes that can’t be measured linearly. Customizable company- and domain-specific WTS templates use the weighted topic score metadata generated by DataScava Indexers to define the precise context at any degree of complexity and depth.

Proponents of NLP contend that it “knows” what language means or intends, but that is a massive challenge within specialized domains. DataScava does not program a machine to learn; instead, it trains the machine to encode complex human logic into domain-specific use cases. Consider how intricate subject matter expertise can be. An SME might think, “I want either a lot of A or B, or maybe C if D is present, but I don’t want anything that includes F or G unless there’s H; but if I have A and C, the FG exception is less important as long as K is there.”
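To show how such a rule can be stated precisely once topic scores exist, here is one possible reading of the SME's logic as a predicate. This is a hypothetical sketch: treating "a lot of A" as a score of at least 5 is an arbitrary assumption, and "less important" is interpreted as fully waiving the F/G exclusion. A through K are the SME's placeholder topics.

```python
def sme_rule(scores: dict[str, int], lots_of_a: int = 5) -> bool:
    """One possible reading of the SME's rule, expressed over topic scores.

    A topic with no score is treated as absent (score 0).
    """
    def has(topic: str) -> bool:
        return scores.get(topic, 0) > 0

    # "Either a lot of A or B, or maybe C if D is present"
    wanted = scores.get("A", 0) >= lots_of_a or has("B") or (has("C") and has("D"))
    if not wanted:
        return False

    # "...but nothing that includes F or G unless there's H;
    #  if I have A and C, the FG exception matters less as long as K is there"
    has_f_or_g = has("F") or has("G")
    f_or_g_excused = has("H") or (has("A") and has("C") and has("K"))
    return not has_f_or_g or f_or_g_excused


print(sme_rule({"A": 6}))                          # True
print(sme_rule({"B": 1, "F": 3}))                  # False: F without H
print(sme_rule({"A": 6, "C": 2, "G": 1, "K": 1}))  # True: A + C + K waives G
```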

Weighted Topic Scoring (WTS)

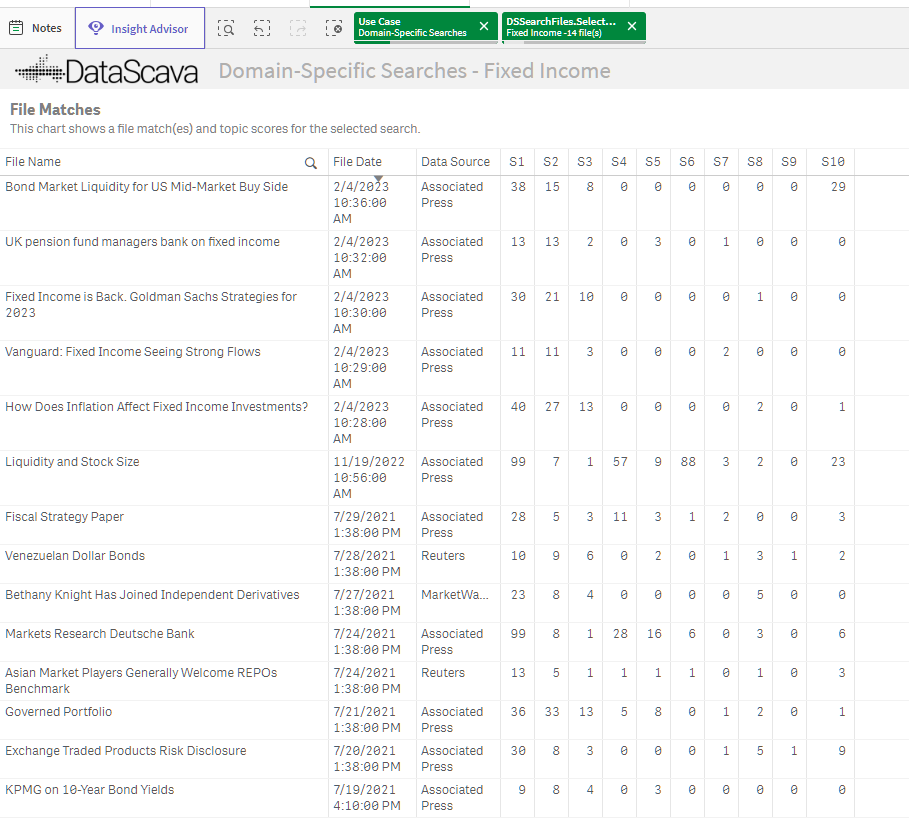

Beyond the topic level, DataScava provides another level of abstraction: a proprietary method called Weighted Topic Scoring (WTS). WTS synthesizes multiple topics into a cohesive category, then scores and ranks documents to determine how well each one fits that user-defined category. It uses your customizable, user-specific Search Templates to automatically classify, filter, tag, match, and route your existing and incoming unstructured data 24/7.

Users “set the bar” for each of their required, desired, and not-desired weighted topics based on their own objectives, areas of interest, priorities, and more. To match your Search Template, a file must meet ALL of the defined score thresholds in EVERY defined topic. Files that might match a one-dimensional Boolean search but lack the desired depth in one or more topics are filtered out.
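The "all thresholds in every topic" rule can be sketched as follows. `SearchTemplate` and its fields are hypothetical stand-ins for a Search Template, assuming required and desired topics carry a minimum score and not-desired topics carry a maximum.

```python
from dataclasses import dataclass, field


@dataclass
class SearchTemplate:
    """Hypothetical WTS-style template: per-topic score bars a file must clear."""
    minimums: dict[str, int] = field(default_factory=dict)  # required/desired: score >= min
    maximums: dict[str, int] = field(default_factory=dict)  # not desired: score <= max

    def matches(self, scores: dict[str, int]) -> bool:
        # Every threshold must hold; a topic with no score counts as 0.
        meets_mins = all(scores.get(t, 0) >= m for t, m in self.minimums.items())
        under_maxes = all(scores.get(t, 0) <= m for t, m in self.maximums.items())
        return meets_mins and under_maxes


template = SearchTemplate(minimums={"Python": 3, "Cloud": 2}, maximums={"Internship": 0})
files = {
    "a.txt": {"Python": 4, "Cloud": 2},
    "b.txt": {"Python": 9},                                # no Cloud depth
    "c.txt": {"Python": 5, "Cloud": 3, "Internship": 1},  # not-desired topic present
}
print([name for name, s in files.items() if template.matches(s)])  # ['a.txt']
```

A Boolean "Python OR Cloud" query would return all three files; the per-topic bars keep only the one with enough depth in every topic.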

DataScava returns a list of File Matches that you can sort on multiple levels by topic(s), data source, file date, and more. It keeps returning new matches for as long as the search is marked active. When a search is made inactive, it and its File Matches are stored in your DataScava for future use. Users can create, copy, and modify Search Templates on the fly, without any new programming.
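A multi-level sort is just a sort on a composite key. In this sketch, `FileMatch` and `multi_level_sort` are hypothetical names, and the level order (chosen topics highest-first, then data source A to Z, then newest file first) is one example configuration, not a fixed DataScava behavior.

```python
from datetime import date
from typing import NamedTuple


class FileMatch(NamedTuple):
    """A hypothetical File Match record: file name, data source, file date, topic scores."""
    name: str
    source: str
    file_date: date
    scores: dict[str, int]


def multi_level_sort(matches: list[FileMatch], topics: list[str]) -> list[FileMatch]:
    """Sort by each listed topic's score (highest first), then data source (A-Z),
    then file date (newest first)."""
    return sorted(
        matches,
        key=lambda m: (
            *(-m.scores.get(t, 0) for t in topics),  # one sort level per topic
            m.source,
            -m.file_date.toordinal(),
        ),
    )


matches = [
    FileMatch("a.txt", "SharePoint", date(2024, 3, 1), {"Python": 4, "Cloud": 2}),
    FileMatch("b.txt", "Email", date(2024, 5, 9), {"Python": 4, "Cloud": 5}),
    FileMatch("c.txt", "Email", date(2023, 11, 2), {"Python": 7, "Cloud": 1}),
    FileMatch("d.txt", "Email", date(2024, 1, 15), {"Python": 4, "Cloud": 2}),
]
print([m.name for m in multi_level_sort(matches, ["Python", "Cloud"])])
# ['c.txt', 'b.txt', 'd.txt', 'a.txt']
```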

With WTS, users select, prioritize, and segment “required” and “nice-to-have” topics, set minimum score thresholds for each, and rank the output. WTS matches only files that meet or exceed all required topic scores. Non-technical users can adjust the dynamic BI, add or edit topics and key terms on the fly using DSLP and WTS, and home in on results using multi-level sort and other criteria. WTS search filters work continuously, mining new textual data as it arrives and routing output to downstream systems.
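The split between "required" and "nice-to-have" topics can be sketched as a hard filter followed by a ranking. The weighted sum used for ranking here is an assumption chosen for simplicity; WTS itself is proprietary, and `rank_files`, the weights, and the sample files are all hypothetical.

```python
def rank_files(
    files: dict[str, dict[str, int]],
    required: dict[str, int],
    weights: dict[str, float],
) -> list[tuple[str, float]]:
    """Filter on required minimums, then rank survivors by a weighted sum of topic scores.

    required: topic -> minimum score every file must meet (hard filter)
    weights:  topic -> weight; "nice-to-have" topics only add to the rank
    """
    ranked = []
    for name, scores in files.items():
        if not all(scores.get(t, 0) >= minimum for t, minimum in required.items()):
            continue  # fails at least one required threshold
        rank = sum(w * scores.get(t, 0) for t, w in weights.items())
        ranked.append((name, rank))
    # Highest rank first; ties broken by file name for a repeatable order.
    ranked.sort(key=lambda pair: (-pair[1], pair[0]))
    return ranked


files = {
    "a.txt": {"Python": 4, "Cloud": 2},
    "b.txt": {"Python": 6, "Cloud": 1, "Kubernetes": 3},
    "c.txt": {"Cloud": 8},  # fails the required Python >= 3
}
print(rank_files(files, required={"Python": 3},
                 weights={"Python": 1.0, "Cloud": 0.5, "Kubernetes": 2.0}))
# [('b.txt', 12.5), ('a.txt', 5.0)]
```

Keeping the filter and the ranking separate means a strong nice-to-have score can reorder matches but can never rescue a file that misses a required threshold.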

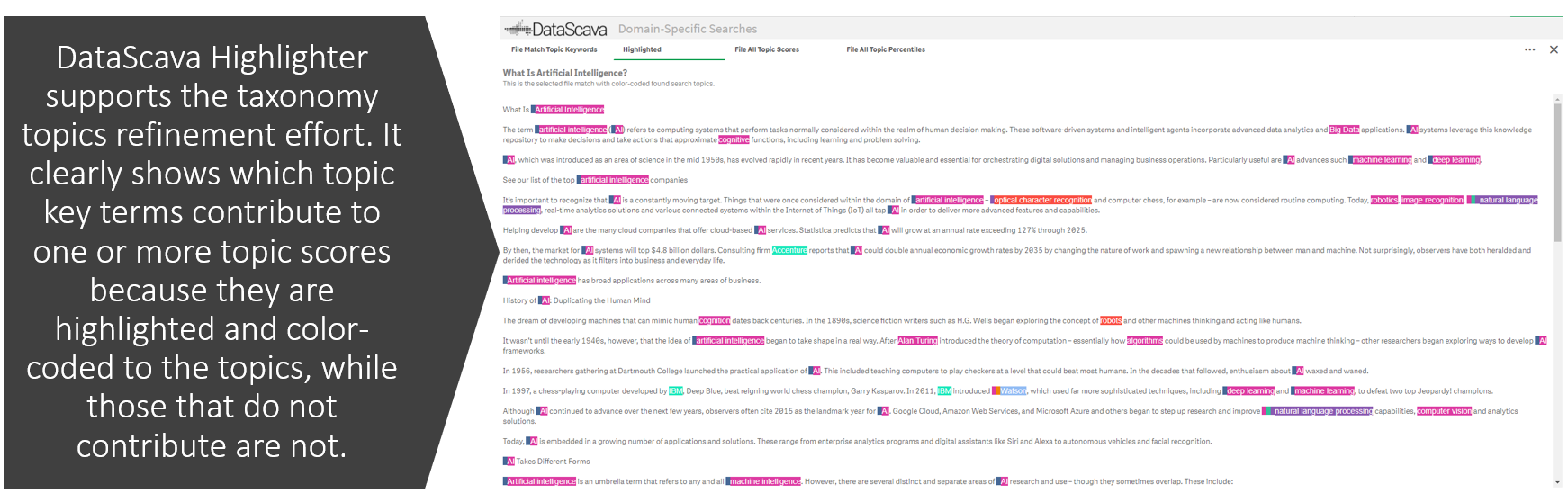

Highlighter

DataScava Highlighter supports the effort to refine taxonomy topics. When you view source data in DataScava, it clearly shows which key terms contribute to one or more topic scores: those terms are highlighted and color-coded by topic, while terms that do not contribute are not. This is how users identify errors and improve the accuracy of DataScava Topics on an ongoing basis. Just as businesses evolve, so must ontologies. In NLP, Machine Learning, and AI systems, by contrast, end users are often in the dark about why a snippet of input data produces a specific output.
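The idea behind topic-coded highlighting can be sketched in a few lines. Text markers of the form `[[Topic|term]]` stand in for the colors a real viewer would use; `highlight` and the sample taxonomy are hypothetical, and preferring the longest term at a given position is an assumed design choice.

```python
import re


def highlight(text: str, taxonomy: dict[str, list[str]]) -> str:
    """Mark each key-term hit as [[Topic|matched text]]; all other text is left as-is."""
    # If the same term appears under two topics, the later topic wins in this sketch.
    term_to_topic = {term.lower(): topic for topic, terms in taxonomy.items() for term in terms}
    if not term_to_topic:
        return text
    # Longest terms first, so "machine learning" wins over a shorter term at the same spot.
    alternatives = sorted(term_to_topic, key=len, reverse=True)
    pattern = re.compile(
        r"(?<!\w)(" + "|".join(map(re.escape, alternatives)) + r")(?!\w)", re.IGNORECASE
    )
    return pattern.sub(lambda m: f"[[{term_to_topic[m.group(1).lower()]}|{m.group(1)}]]", text)


taxonomy = {"AI": ["machine learning", "LLM"], "Python": ["pandas", "Python"]}
text = "Built machine learning features in Python with pandas."
print(highlight(text, taxonomy))
# Built [[AI|machine learning]] features in [[Python|Python]] with [[Python|pandas]].
```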

Classifier

One of the most labor-intensive parts of training and deploying machine learning and AI systems involves cleansing and classifying raw unstructured textual data. DataScava works directly with messy data and so does not require cleansing to successfully classify, tag, and label it using DSLP, WTS, and TTT. Furthermore, documents not containing defined domain-specific topics can be ignored after classification, so the need to cleanse data is lessened.

DataScava Highlighter uses this classification data to empower human-in-the-loop (HITL) verification of training data and to improve the accuracy of the taxonomy topics and their key terms on an ongoing basis. In addition, by automating the classification process, DataScava can be used during the training process and everyday system operation to assess output.

Output

Machine Learning or AI users often find that they do not understand what the system did, why it did it, or whether it gave the correct results. DataScava does not create output through a hidden, black-box internal process; instead, it empowers users to identify exactly the subset of raw data they are interested in. While intelligent systems replace human effort, DataScava informs and empowers it, providing the logical, analytical scaffolding and boundaries that keep the human in command.

Taxonomies for Financial and Technology Domains

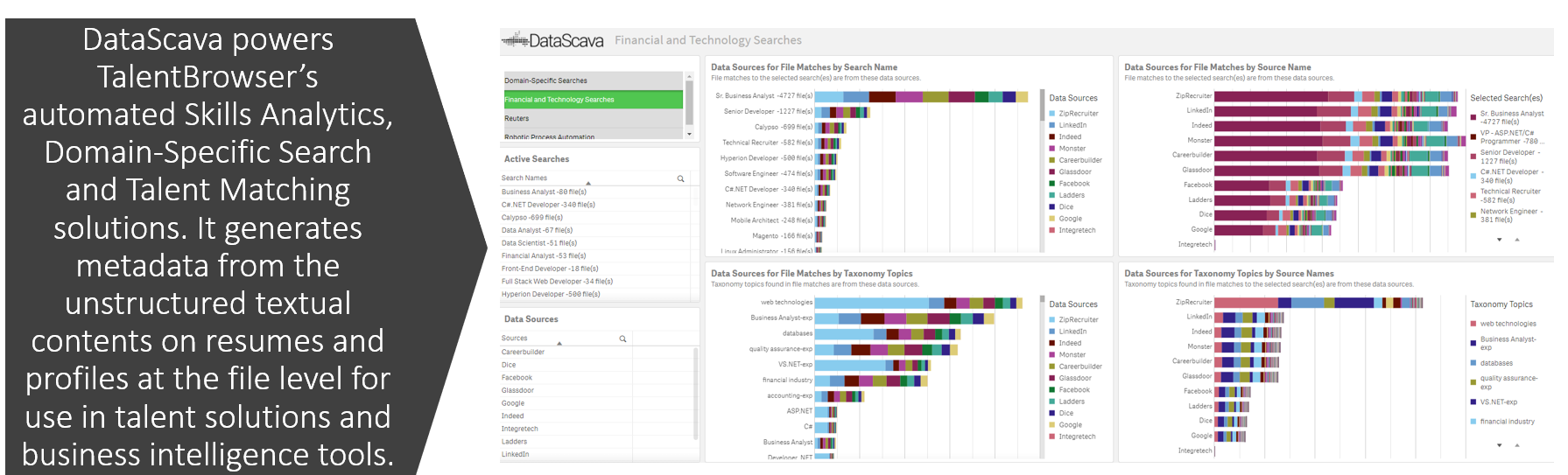

Taxonomies for Talent Matching and Skills Analytics