Check out this article written by our CTO John Harney about how DataScava mines unstructured textual data using our Domain-Specific Language Processing and patented Weighted Topic Scoring:

Real-time mining of unstructured textual content isn’t simple. Available solutions don’t work well unless they’re fine-tuned to meet your specific needs and address the unique quirks in your company’s information. To truly add value, your applications, whether AI-driven or not, require a vocabulary that captures the definitions, context and nuance of your business.

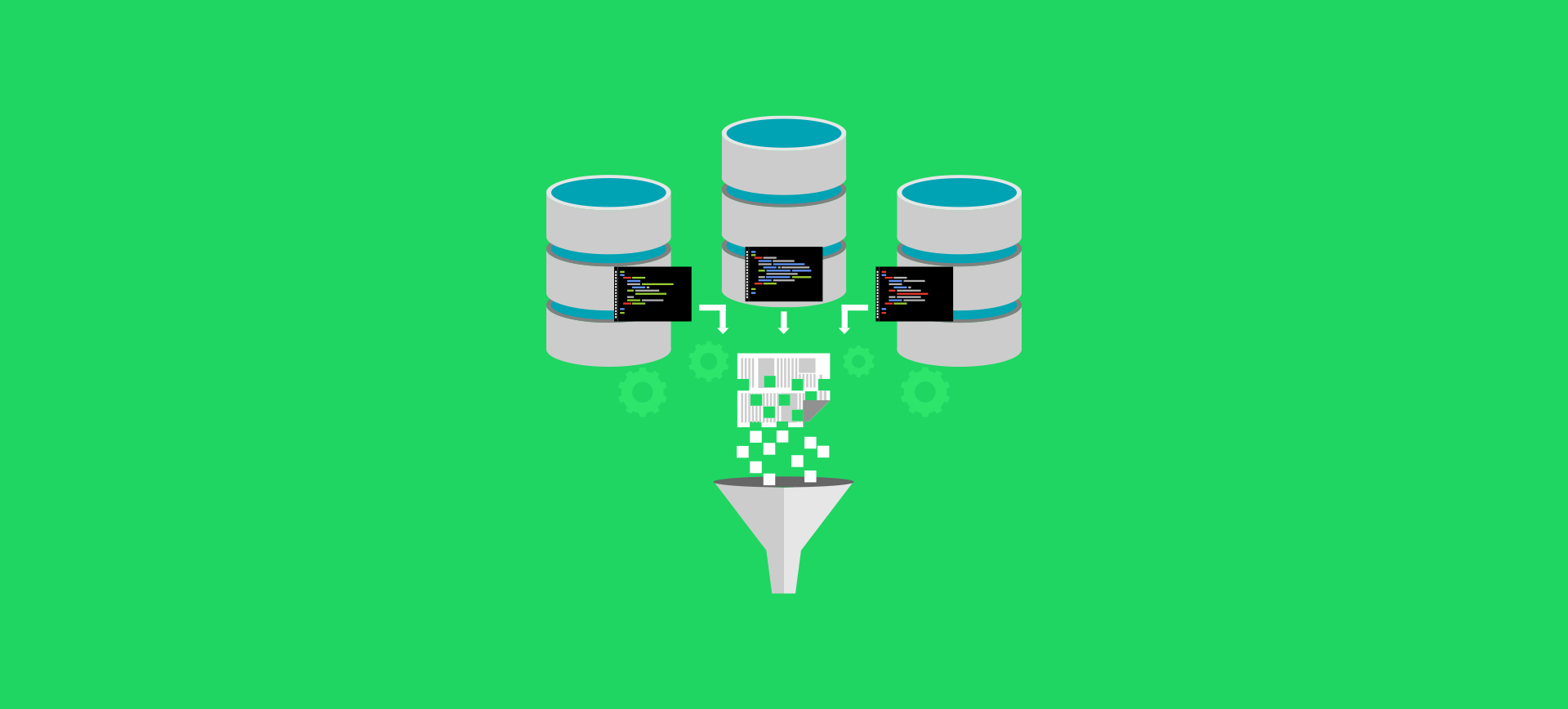

DataScava’s unstructured data miner provides fast and effective solutions for leveraging the explosive growth of unstructured data. The sheer volume of information—even some small businesses must manage thousands of pages each day—challenges companies to find the most efficient and accurate way to read data, index it, extract what they need and quickly route it to its proper destination.

Conventional wisdom says that taking full advantage of information requires a “data-driven system” based on “artificial intelligence,” “machine learning” or some other application. Without modification, however, these technologies often process information quickly but incorrectly. Needless to say, machines that use inaccurate data for machine learning, AI insights or business decisions, or that pass data to the wrong destination, don’t do anyone much good.

That’s where DataScava comes in.

Power from Unstructured Data

DataScava’s proprietary search engine extracts actionable, highly precise and industry-specific information from the content you already have. Our technology is data-agnostic and provides a true competitive advantage that maximizes data’s transformative power.

Simple to use and transparent, DataScava is accessible to a wide range of technical and non-technical users. It encourages cross-discipline collaboration and facilitates a faster path to improved efficiency and productivity. It also automates time-consuming data preparation, positively impacting your bottom line.

Leveraging their own subject matter expertise, users can easily set DataScava’s filters. Those filters then work around the clock, continually increasing the system’s capabilities and providing measurable benefits. Our solution can stand on its own, integrate with other systems or curate the data needed for AI and machine learning projects. However you employ it, DataScava provides an automated, simple and precise way for business people and data professionals to search, index, score and match unstructured textual content.

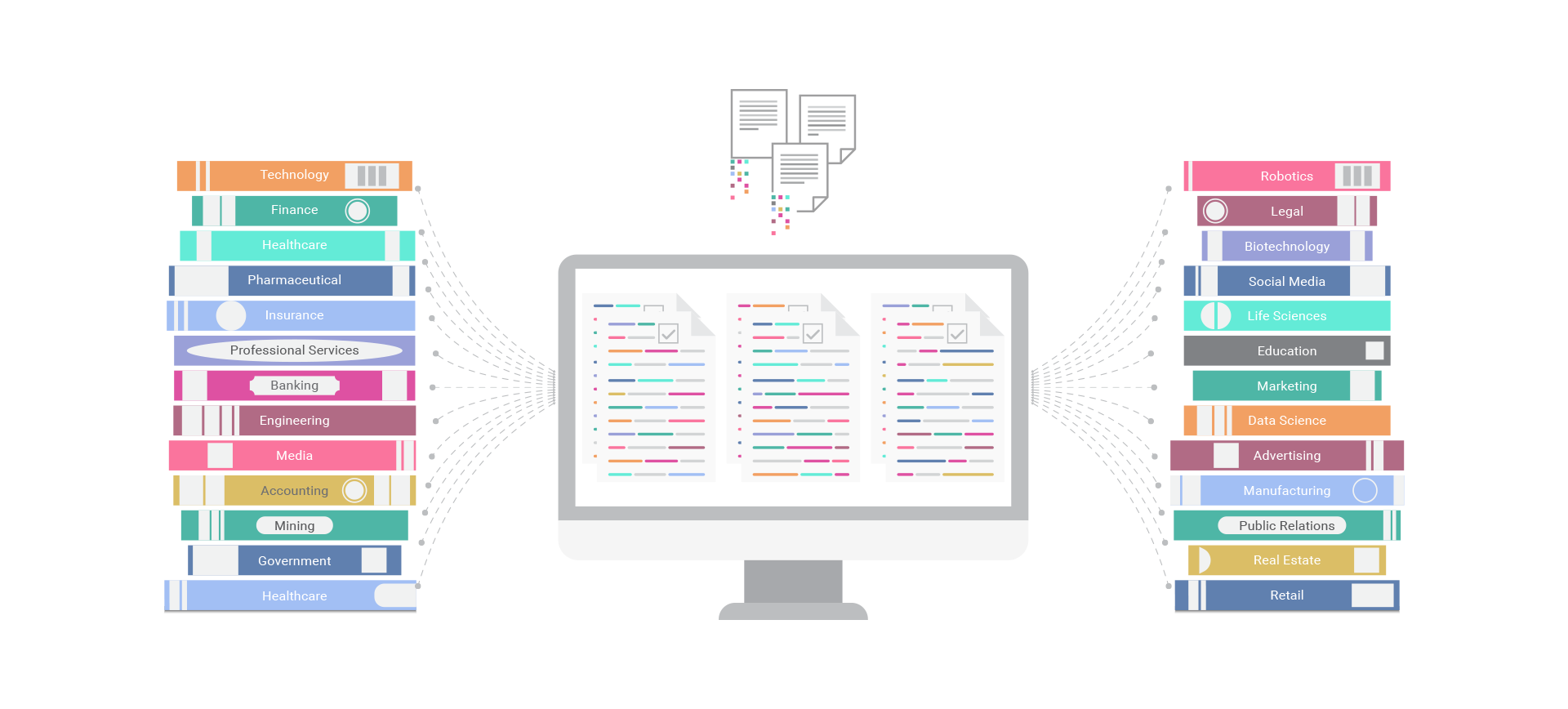

Domain-Specific Language Processing and Weighted Topic Scoring

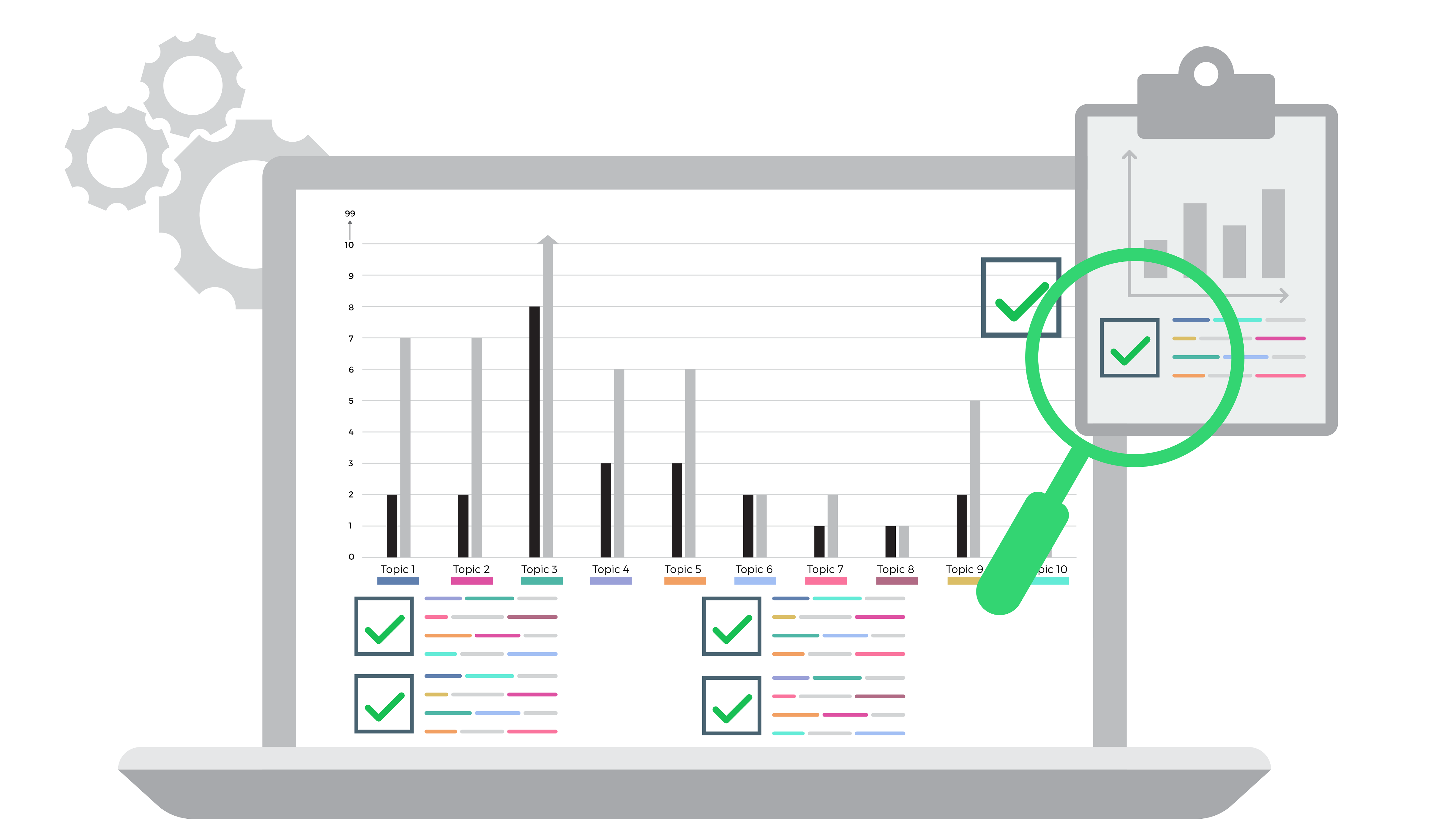

DataScava is the only product to offer Domain-Specific Language Processing with our patented Weighted Topic Scoring, a combination that provides highly precise results you can see, control and measure. Users select topics of interest, weight their significance, adjust them on the fly and rank the resulting output. They can then home in further, using multi-level sort to drill down and surface key results while the system automatically mines and matches new data as it arrives. Search templates, editable topic libraries, percentile rankings and “not” capability allow them to extract the exact data they need.
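To make the idea of weighting topics and ranking output concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not DataScava's patented method: the topic library, the keyword lists, the `score` and `rank` functions and the simple "count hits times weight" formula are all hypothetical.

```python
import re

# Hypothetical topic library: topic name -> keywords (illustrative only).
TOPICS = {
    "finance": ["fiscal", "monetary", "economic", "finance", "financial"],
    "trading": ["derivatives", "index options", "trading system"],
    "sports": ["league", "playoffs"],
}

def topic_hits(text, keywords):
    """Count whole-word, case-insensitive occurrences of each keyword or phrase."""
    lowered = text.lower()
    return sum(
        len(re.findall(r"\b" + re.escape(kw) + r"\b", lowered)) for kw in keywords
    )

def score(text, weights, exclude=()):
    """Weighted sum of topic hits; None if an excluded ("not") topic appears."""
    for topic in exclude:
        if topic_hits(text, TOPICS[topic]) > 0:
            return None
    return sum(w * topic_hits(text, TOPICS[t]) for t, w in weights.items())

def rank(docs, weights, exclude=()):
    """Return (doc_id, score) pairs, highest score first, skipping excluded docs."""
    scored = [(doc_id, score(text, weights, exclude)) for doc_id, text in docs.items()]
    kept = [(d, s) for d, s in scored if s is not None]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)

DOCS = {
    "a": "The trading system outage hit index options and derivatives desks.",
    "b": "Fiscal and monetary policy shaped the economic outlook.",
    "c": "Financial results for the league playoffs were strong.",
}

# Trading matters three times as much as finance; anything about sports is dropped.
RANKED = rank(DOCS, weights={"trading": 3, "finance": 1}, exclude=["sports"])
print(RANKED)
```

Changing the weights and calling `rank` again is the sketch's stand-in for adjusting topics on the fly: the same documents are simply rescored and reordered.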

Domain-Specific Language Processing provides a powerful alternative to Semantic Search and Natural Language Processing. It’s also more effective than Boolean search because users can prioritize their search criteria, automate them and perform “what-if” analysis. That’s especially important today, when most users struggle with even basic queries. As Marti A. Hearst wrote in her book Search User Interfaces, “studies have shown time and again that most users have difficulty specifying queries in Boolean format and often misjudge what the results will be.”

Data Curation With Your Own Vocabulary

Out of the box, AI systems act much like new graduates starting their first job: Their vocabulary includes no real knowledge of how the business works. They don’t account for nuance or context. Starting up, they’re only as effective as their “education” has prepared them to be.

Consider WordNet, a “large lexical database of English” developed at Princeton University. The system groups words into sets of “cognitive synonyms” that share a discrete concept. According to its creators, WordNet links not just words, but specific senses of words. That allows it to deliver narrowly defined results, grouped in ways that go beyond the simple semantic relationships found in a thesaurus.

It sounds impressive. But is it practical? Searching “financial” on WordNet returns just one result: “fiscal.” That’s certainly narrow, but is it useful? What about “finance,” “monetary” or “economic”? WordNet keeps all of those possible matches on the shelf. By comparison, our financial topic has over 250 associated keywords.

In DataScava, all topics and keywords can be easily viewed, edited, renamed, deleted or added to by any user. As a result, your own specialist nomenclature may be adopted across all of your systems, whether they’re AI-driven or not.

But that’s simply a foundation block. As implementation begins, we partner with you to create company-specific topics, their keywords and a customized library of search templates. “Weighted Topic Scoring” returns precise matches of existing, new or modified data automatically. In addition, DataScava indexes the textual content of your files in real-time, generating metadata such as topic scores, percentile rankings and data tags.
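As a rough picture of what metadata such as topic scores, percentile rankings and data tags could look like, consider the following Python sketch. It assumes per-topic scores have already been computed; the file names, the `build_metadata` function, the percentile definition (share of documents scoring at or below a given document) and the tagging threshold are all assumptions made for illustration, not a description of DataScava's actual output format.

```python
def percentile_ranks(scores):
    """Map each doc id to the percentage of documents scoring at or below it."""
    values = list(scores.values())
    n = len(values)
    return {
        doc_id: 100.0 * sum(1 for v in values if v <= s) / n
        for doc_id, s in scores.items()
    }

def build_metadata(scores, tag_threshold=1):
    """Attach per-topic scores, an overall percentile and tags to each document."""
    totals = {doc_id: sum(ts.values()) for doc_id, ts in scores.items()}
    pct = percentile_ranks(totals)
    return {
        doc_id: {
            "topic_scores": ts,
            "percentile": pct[doc_id],
            # Tag a document with every topic that meets the threshold.
            "tags": sorted(t for t, s in ts.items() if s >= tag_threshold),
        }
        for doc_id, ts in scores.items()
    }

# Hypothetical per-topic scores for four files.
TOPIC_SCORES = {
    "report.txt": {"finance": 6, "trading": 3},
    "memo.txt": {"finance": 1, "trading": 0},
    "email.txt": {"finance": 0, "trading": 2},
    "note.txt": {"finance": 0, "trading": 0},
}

METADATA = build_metadata(TOPIC_SCORES)
for name, meta in METADATA.items():
    print(name, meta)
```

Because the metadata is a plain dictionary per file, a downstream system could sort on it, filter by tag or route documents without needing to reread the original text.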

Better Results in a Straightforward Way

DataScava also addresses the logic and flexibility challenges posed by the current state of AI. For example, Natural Language Processing systems have been trained to recognize, derive meaning from and respond to specific words and phrases. However, they may ignore words they don’t recognize or misinterpret ones they do. And while unstructured data rarely appears in standard form, NLP is based on the standard use of language. The grammar of natural languages is also ambiguous, and typical sentences have multiple possible analyses. Without extensive programming, an NLP system can’t process code words, jargon and unpredictable changes to sentence structure.

Understanding how language is used in the real world is critical to accurate parsing and deriving correct meaning. An NLP system might know the word “options” and the location of “Summit, N.J.,” but still be challenged to process “The Summit Derivatives Trading System suffered an outage in New Jersey, causing massive losses in Index Options.”

DataScava solves such issues with a rich library of domain-specific topics, fine-tuned to your needs during implementation. Users can constantly correct and adjust the model without bastardizing the system’s vocabulary. Instead, their input enhances it.

We Keep the Human in Command

In essence, NLP is a translator that uses fuzzy logic to identify known constructs and phrases, then substitutes more complex phrases to give the system explicit instructions. At the same time, it removes a cornerstone of human language: nuance. “The blood-red sun birthed a new day as it rose over the horizon” becomes “the sun rose.”

That kind of translation is appropriate for relatively simple tasks, such as automating a phone attendant’s call routing. However, it’s not particularly useful for processing the more complex undertakings human beings perform every day.

For a system to complete more-than-basic tasks, it requires the addition of human subject matter expertise. That means incorporating additional specialist words and phrases of interest into the dictionary. Usability issues aside, Boolean phrases may or may not be relevant, can suppress useful results and create output that’s either too broad or too narrow. Even when the search terms are sophisticated, adding keywords doesn’t necessarily improve results. It simply bastardizes the NLP vocabulary and may negatively impact the information produced by the AI “black box.”

You have to wonder: if there’s an “intelligent” process within AI that “understands what you mean,” and AI applies further “intelligence” to it, how does that logic incorporate these added conditions? Do these exceptions make the system more intelligent or do they hamper its operation? If the user adds a word such as “summit,” as referenced above, to mean “Summit trading system,” does the AI engine always assume “summit” is linked to financial trading? What does it do with phrases such as “they reached the summit of the mountain” or “the heads of government held a summit to discuss their options”?
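The “summit” ambiguity can be illustrated with a small Python sketch that matches whole phrases rather than the bare word. This is a hypothetical example of phrase-level keywords in general, not DataScava's implementation; the phrase list and the `mentions_trading_summit` function are invented for illustration.

```python
import re

# Hypothetical keywords: "summit" counts only inside a trading-related phrase.
TRADING_PHRASES = [
    "summit trading system",
    "summit derivatives trading system",
]

def mentions_trading_summit(text):
    """True if the text contains one of the trading phrases as whole words."""
    lowered = text.lower()
    return any(
        re.search(r"\b" + re.escape(phrase) + r"\b", lowered)
        for phrase in TRADING_PHRASES
    )

SENTENCES = [
    "The Summit Derivatives Trading System suffered an outage in New Jersey.",
    "They reached the summit of the mountain.",
    "The heads of government held a summit to discuss their options.",
]

RESULTS = [mentions_trading_summit(s) for s in SENTENCES]
print(RESULTS)
```

Only the first sentence matches, because the other two use “summit” outside the listed phrases. The point of the sketch is that the rule is visible and editable: anyone can read the phrase list and see why a sentence did or did not match.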

Can these issues be addressed? Of course. But doing so usually involves modifying the AI model—an expensive effort given the amount of time and money required. And even with training, many users won’t ever understand the way NLP “thinks” or how new keywords can impact a Boolean search. The potential results include inefficiency, lost productivity and relying on incorrect insights to make ineffective decisions.

Why DataScava Makes Sense

DataScava addresses these concerns by simplifying the user experience and powering search results that are more accurate in every sense of the word.

- As it works, DataScava finds, measures and highlights references based on your interests and priorities. Those references can be tweaked as desired in real time.

- Over time, DataScava’s white box allows you to develop and maintain your own nomenclature, a vocabulary that’s specific to you, remains proprietary and can be used across other systems as necessary. As you deploy new systems, there’s no reason to repeat their training.

- DataScava eliminates the need for continual manual curation, evaluation and costly reprogramming. It works automatically to mine your data based on criteria you control.

- Our results can be audited. Unlike the typical AI “black box,” DataScava allows users to see precisely why it produced a given outcome in any situation.

- DataScava serves your interests alone. In most cases, deploying an AI system involves training a vendor’s product, which can then be resold to your competitors. With DataScava, your intelligence stays in-house and constantly improves.

To work properly, AI systems require a tremendous amount of standardized, labeled and otherwise “structured” data. Yet by 2022, more than 90 percent of the world’s electronic information will be unstructured, delivered in business reports, research papers, emails, user comments—all written in different styles and using terms whose meaning differs from sector to sector.

DataScava bridges that gap by creating high-quality input that artificial intelligence and machine learning systems can use to improve the quality of their own output. That increases the accuracy of searches, reduces the risk of inappropriate analysis and badly informed decisions, and increases your data team’s efficiency. With no black box, it generates the kind of auditable output that helps compliance officers sleep better.

By extracting industry-specific information, DataScava makes unstructured data more accessible, more understandable and, above all, more useful.